- Snowflake Edition

- Types of Snowflake editions

- Standard edition

- Enterprise edition

- Business critical edition

- Virtual Private Snowflake

- Final thought

Snowflake Edition:

The Snowflake edition that your organization chooses determines the unit costs for the credits and the data storage you use. Other factors that affect unit costs are the region where your Snowflake account is located and whether it is an on-demand or a capacity account.

Let us define the terms “on-demand” and “capacity account”.

On demand: usage-based pricing that doesn’t require a long-term licensing commitment.

Capacity: discounted pricing that is based on an upfront capacity commitment.
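To make the pricing factors concrete, here is a minimal Python sketch of how a monthly bill could be estimated from unit costs. The `Pricing` class, the `monthly_cost` function, and every number below are hypothetical placeholders for illustration; they are not real Snowflake prices, which depend on edition, region, and account type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Unit costs for one edition/region/account-type combination (hypothetical)."""
    credit_price: float      # cost per compute credit
    storage_price_tb: float  # cost per TB of storage per month


def monthly_cost(pricing: Pricing, credits_used: float, storage_tb: float) -> float:
    """Estimated bill = credits used * credit price + storage * storage price."""
    return credits_used * pricing.credit_price + storage_tb * pricing.storage_price_tb


# Placeholder numbers only, NOT actual Snowflake rates.
on_demand = Pricing(credit_price=3.0, storage_price_tb=40.0)
capacity = Pricing(credit_price=2.5, storage_price_tb=25.0)

print(monthly_cost(on_demand, credits_used=100, storage_tb=2))  # 380.0
print(monthly_cost(capacity, credits_used=100, storage_tb=2))   # 300.0
```

With identical usage, the only thing that changes between the two calls is the unit cost, which is the point of the on-demand versus capacity distinction.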


Types of Snowflake editions:

In this section, we are going to explain the various Snowflake editions:

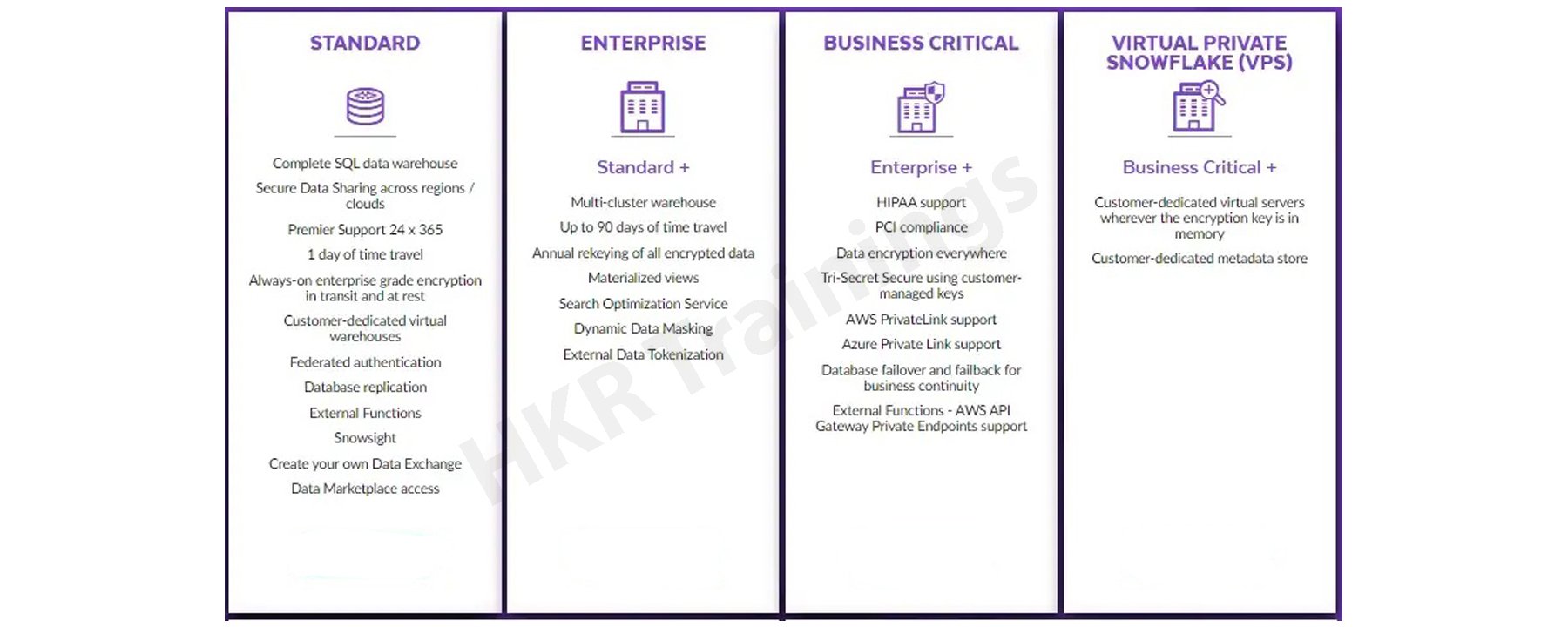

Snowflake offers four editions, each providing progressively more features:

- Standard edition

- Enterprise edition

- Business critical edition

- Virtual Private Snowflake (VPS)

The image above illustrates the Snowflake editions and their features.
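One way to picture "progressively more features" is to treat the editions as an ordered scale and record the lowest edition in which a feature appears. The Python sketch below does this for a handful of features taken from the lists in this article; the `Edition` enum, the `MIN_EDITION` table, and `has_feature` are illustrative names, and the table is deliberately partial rather than a complete feature matrix.

```python
from enum import IntEnum


class Edition(IntEnum):
    """Editions ordered from the introductory level to the most restrictive."""
    STANDARD = 1
    ENTERPRISE = 2
    BUSINESS_CRITICAL = 3
    VPS = 4


# Lowest edition in which each feature is listed in this article (partial sample).
MIN_EDITION = {
    "standard time travel": Edition.STANDARD,
    "extended time travel": Edition.ENTERPRISE,
    "multi-cluster virtual warehouses": Edition.ENTERPRISE,
    "materialized views": Edition.ENTERPRISE,
    "customer-managed encryption keys": Edition.BUSINESS_CRITICAL,
    "dedicated metadata store": Edition.VPS,
}


def has_feature(edition: Edition, feature: str) -> bool:
    """A feature is available in its minimum edition and every higher one."""
    return edition >= MIN_EDITION[feature]


print(has_feature(Edition.STANDARD, "materialized views"))         # False
print(has_feature(Edition.BUSINESS_CRITICAL, "materialized views"))  # True
```

This simple ordering assumes every feature carries forward to higher editions, which holds for the sample above but should be checked against the per-edition lists for anything else.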


1. Standard edition:

Standard edition is the introductory-level edition. It provides full, unlimited access to all of Snowflake’s standard features and strikes a strong balance between features, level of support, and cost.

Features of Standard edition:

Release management:

The Standard edition does not include early access to new releases; that capability starts with the Enterprise edition.

Security, Governance, and data protection:

- SOC 2 Type Ⅱ certification.

- Federated authentication and single sign-on (SSO) for centralizing and streamlining user authentication.

- OAuth for authorizing account access without sharing or storing user login credentials.

- Network policies for limiting/controlling site access by user IP address.

- Automatic encryption of all data.

- Support for multi-factor authentication.

- Object-level access control.

- Standard time travel (up to 1 day) for accessing/restoring modified and deleted data.

- Disaster recovery of modified or deleted data (for 7 days beyond time travel) through fail-safe.
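The time travel and fail-safe periods in the list above stack one after the other. The Python sketch below models that timeline under simplifying assumptions: `recovery_status` is a hypothetical helper, the boundaries are treated as inclusive, and the 1-day default reflects the standard time travel period mentioned above.

```python
from datetime import datetime, timedelta

FAIL_SAFE_DAYS = 7  # fail-safe period that follows time travel, per the list above


def recovery_status(changed_at: datetime, now: datetime, time_travel_days: int = 1) -> str:
    """Classify how data modified or deleted at `changed_at` could be recovered at `now`."""
    age = now - changed_at
    if age <= timedelta(days=time_travel_days):
        return "time travel"
    if age <= timedelta(days=time_travel_days + FAIL_SAFE_DAYS):
        return "fail-safe"
    return "unrecoverable"


changed = datetime(2024, 1, 1)
print(recovery_status(changed, datetime(2024, 1, 1, 12)))  # time travel
print(recovery_status(changed, datetime(2024, 1, 5)))      # fail-safe
print(recovery_status(changed, datetime(2024, 1, 10)))     # unrecoverable
```

Passing a larger `time_travel_days` value shows how a longer time travel period pushes the whole window out, since fail-safe begins only after time travel ends.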

SQL support:

- Standard SQL, including most DDL and DML defined in SQL:1999.

- Advanced DML such as multi-table INSERT, MERGE, and multi-merge.

- Broad support for standard data types.

- Native support for semi-structured data (JSON, Avro, ORC, Parquet, and XML).

- Native support for geospatial data.

- Native support for unstructured data.

- Collation rules for string/text data in table columns.

- Integrity constraints (not enforced) on table columns for informational and modeling purposes.

- Multi-statement transactions.

- User-defined functions (UDFs) with support for SQL, JavaScript, and Java.

- External functions for extending Snowflake to other development platforms.

- AWS private endpoints for external functions.

- JavaScript-based stored procedures.

- External tables for referencing data in a cloud storage data lake.

- Support for clustering data in a very large table to improve query performance, with automatic maintenance of clustering.

Interface and Tools:

- Full-featured web-based interface with native SQL worksheet capabilities.

- Snowsight, the next-generation SQL worksheet for advanced query development, data analysis, and visualization.

- SnowSQL, a command-line client for building/testing queries, loading/unloading bulk data, and automating DDL operations.

- SnowCD, a command-line diagnostic tool for identifying and fixing client connectivity issues.

- Programmatic interfaces for Python, Spark, Node.js, and Go.

- Native support for JDBC and ODBC.

- Extensive ecosystem for connecting to ETL, BI, and other third-party vendors and technologies.

- Snowflake Partner Connect for initiating free software/service trials with a growing network of partners in the Snowflake ecosystem.

- Snowpark, a library that provides an intuitive API for querying and processing data in a data pipeline.

Data import and export:

- Bulk loading from delimited flat files (CSV, TSV, etc) and semi-structured data files (JSON, Avro, ORC, Parquet, and XML).

- Bulk unloading to delimited flat files and JSON files.

- Snowpipe for continuous micro-batch loading.

- Snowflake Connector for Kafka to load the data from Apache Kafka topics.

Data pipeline:

- Streams for tracking table changes.

- Tasks for scheduling the execution of SQL statements often in conjunction with table streams.

Data Replication and failover:

- Database replication between Snowflake accounts (within an organization) to keep the database objects and stored data synchronized.

- Database failover and failback between Snowflake accounts for business continuity and disaster recovery.

Data sharing:

- As a data provider, securely share data with other accounts.

- As a data consumer, query data shared with your account by data providers.

- Secure data sharing across regions and cloud platforms (through data replication).

- Snowflake Data Marketplace, where providers and consumers meet to securely share data.

- Data exchange, a private hub of administrators, providers, and consumers that you invite to securely collaborate around data.

Customer support:

- Snowflake community, Snowflake’s online knowledge base, and support portal (for logging and tracking Snowflake support tickets).

- Premier support, which includes 24/7 coverage and a 1-hour response window for severity 1 issues.


2. Enterprise edition:

Enterprise edition provides all the features of the Standard edition, plus additional features designed specifically for the needs of large-scale enterprises.

Features of Enterprise edition:

Release management:

24-hour early access to weekly new releases, which can be used for additional testing/validation before each release is deployed to your production accounts.

Security, governance, and data protection:

- SOC2 Type Ⅱ certifications.

- Federated authentication and SSO for centralizing and streamlining user authentication.

- OAuth for authorizing account access without sharing or storing user login credentials.

- Network policies for limiting/controlling site access by user IP address.

- Automatic encryption of all data.

- Support for multi-factor authentication.

- Object-level access control.

- Standard time travel (up to 1 day) for accessing/restoring modified and deleted data.

- Disaster recovery of modified/deleted data (for 7 days beyond time travel) through fail-safe.

- Extended time travel (up to 90 days).

- Periodic rekeying of encrypted data for data protection.

- Column-level security to apply masking policies to columns in the tables or views.

- Row access policies to determine which rows are visible in a query result.

- Object tagging to apply tags to Snowflake objects to facilitate tracking sensitive data and resource usage.

Compute resource management:

- Virtual warehouses, separate compute clusters for isolating query and data loading workloads.

- Resource monitors for monitoring virtual warehouse credit usage.

- Multi-cluster virtual warehouses for scaling compute resources to meet concurrency needs.

SQL support:

- Standard SQL, including most DDL and DML, defined in SQL:1999

- Advanced DML such as multi-table INSERT, MERGE, and multi-merge.

- Broad support for standard data types.

- Native support for semi-structured data (JSON, Avro, ORC, Parquet, and XML).

- Native support for geospatial data.

- Native support for unstructured data.

- Collation rules for string/text data in table columns.

- Integrity constraints (not enforced) on table columns for informational and modeling purposes.

- Multi-statement transactions.

- User-defined functions (UDFs) with support for SQL, JavaScript, and Java.

- External functions for extending Snowflake to other development platforms.

- AWS private endpoints for external functions.

- JavaScript-based stored procedures.

- External tables for referencing data in a cloud storage data lake.

- Support for clustering data in very large tables to improve query performance with automatic maintenance of clustering.

- Search optimization for point lookup queries with automatic maintenance.

- Materialized views, with automatic maintenance of results.

Interfaces and tools:

- Full-featured web-based interface with native SQL worksheets capabilities.

- Snowsight, the next-generation SQL worksheet for advanced query development, data analysis, and visualization.

- SnowSQL, a command-line client for building/testing queries, loading/unloading bulk data, and automating DDL operations.

- SnowCD, a command-line diagnostic tool for identifying and fixing client connectivity issues.

- Programmatic interfaces for Python, Spark, Node.js, and Go.

- Native support for JDBC and ODBC.

- Extensive ecosystem for connecting to ETL, BI, and other third-party vendors and technologies.

- Snowflake Partner Connect for initiating free software/service trials with a growing network of partners in the Snowflake ecosystem.

- Snowpark, a library that provides an intuitive API for querying and processing data in a data pipeline.

Data import and export:

- Bulk loading from delimited flat files (CSV, TSV, etc) and semi-structured data files (JSON, Avro, ORC, Parquet, and XML).

- Bulk unloading to delimited flat files and JSON files.

- Snowpipe for continuous micro-batch loading.

- Snowflake Connector for Kafka for loading data from Apache Kafka topics.

Data pipelines:

- Streams for tracking table changes.

- Tasks for scheduling the execution of SQL statements, often in conjunction with table streams.

Data replication and failover:

- Database replication between Snowflake accounts (within an organization) to keep the database objects and stored data synchronized.

Data sharing:

- As a data provider, securely share data with other accounts.

- As a data consumer, query data shared with your account by data providers.

- Secure data sharing across regions and cloud platforms (through data replication).

- Snowflake data marketplace where providers and consumers meet to securely share data.

- Data exchange, a private hub of administrators, providers, and consumers that you invite to securely collaborate around data.

Customer support:

- Snowflake community, Snowflake’s online knowledge base, and support portal (for logging and tracking Snowflake support tickets).

- Premier support, which includes 24/7 coverage and a 1-hour response window for severity 1 issues.


3. Business critical edition:

Formerly known as “Enterprise for Sensitive Data”, the Business critical edition offers a higher level of data protection to support the needs of organizations with extremely sensitive data, particularly PHI (protected health information) data that must comply with HIPAA (Health Insurance Portability and Accountability Act) and HITRUST CSF regulations.

This edition includes all the features of the Enterprise edition, plus enhanced data security and data protection features.

Features of the business-critical edition:

Release management:

24-hour early access to weekly new releases, which can be used for additional testing/validation before each release is deployed to your production accounts.

Security, governance, and data protection:

- SOC2 Type Ⅱ certifications.

- Federated authentication and SSO for centralizing and streamlining user authentication.

- OAuth for authorizing account access without sharing or storing user login credentials.

- Network policies for limiting/controlling site access by user IP address.

- Automatic encryption of all data.

- Support for multi-factor authentication.

- Object-level access control.

- Standard time travel (up to 1 day) for accessing/restoring modified and deleted data.

- Disaster recovery of modified/deleted data (for 7 days beyond time travel) through fail-safe.

- Extended time travel (up to 90 days).

- Periodic rekeying of encrypted data for data protection.

- Column-level security to apply masking policies to columns in the tables or views.

- Row access policies to determine which rows are visible in a query result.

- Object tagging to apply tags to Snowflake objects to facilitate tracking sensitive data and resource usage.

- Customer-managed encryption keys through Tri-Secret Secure.

- Support for PHI data (in accordance with HIPAA and HITRUST CSF regulations).

- Support for PCI DSS.

- Support for FedRAMP Moderate data (in the government regions).

- Support for IRAP Protected (P) data (in the Asia Pacific regions).

Compute resource management:

- Virtual warehouses, separate compute clusters for isolating query and data loading workloads.

- Resource monitors for monitoring virtual warehouse credit usage.

- Multi-cluster virtual warehouses for scaling compute resources to meet concurrency needs.

SQL support:

- Standard SQL, including most DDL and DML, defined in SQL:1999

- Advanced DML such as multi-table INSERT, MERGE, and multi-merge.

- Broad support for standard data types.

- Native support for semi-structured data (JSON, Avro, ORC, Parquet, and XML).

- Native support for geospatial data.

- Native support for unstructured data.

- Collation rules for string/text data in table columns.

- Integrity constraints (not enforced) on table columns for informational and modeling purposes.

- Multi-statement transactions.

- User-defined functions (UDFs) with support for SQL, JavaScript, and Java.

- External functions for extending Snowflake to other development platforms.

- AWS private endpoints for external functions.

- JavaScript-based stored procedures.

- External tables for referencing data in a cloud storage data lake.

- Support for clustering data in very large tables to improve query performance with automatic maintenance of clustering.

- Search optimization for point lookup queries with automatic maintenance.

- Materialized views, with automatic maintenance of results.

Interfaces and tools:

- Full-featured web-based interface with native SQL worksheets capabilities.

- Snowsight, the next-generation SQL worksheet for advanced query development, data analysis, and visualization.

- SnowSQL, a command-line client for building/testing queries, loading/unloading bulk data, and automating DDL operations.

- SnowCD, a command-line diagnostic tool for identifying and fixing client connectivity issues.

- Programmatic interfaces for Python, Spark, Node.js, and Go.

- Native support for JDBC and ODBC.

- Extensive ecosystem for connecting to ETL, BI, and other third-party vendors and technologies.

- Snowflake Partner Connect for initiating free software/service trials with a growing network of partners in the Snowflake ecosystem.

- Snowpark, a library that provides an intuitive API for querying and processing data in a data pipeline.

Data import and export:

- Bulk loading from delimited flat files (CSV, TSV, etc) and semi-structured data files (JSON, Avro, ORC, Parquet, and XML).

- Bulk unloading to delimited flat files and JSON files.

- Snowpipe for continuous micro-batch loading.

- Snowflake Connector for Kafka for loading data from Apache Kafka topics.

Data pipelines:

- Streams for tracking table changes.

- Tasks for scheduling the execution of SQL statements, often in conjunction with table streams.

Data replication and failover:

- Database replication between Snowflake accounts (within an organization) to keep the database objects and stored data synchronized.

- Database failover and failback between Snowflake accounts for business continuity and disaster recovery.

Data sharing:

- As a data provider, securely share data with other accounts.

- As a data consumer, query data shared with your account by data providers.

- Secure data sharing across regions and cloud platforms (through data replication).

- Snowflake Data Marketplace, where providers and consumers meet to securely share data.

- Data exchange, a private hub of administrators, providers, and consumers that you invite to securely collaborate around data.

Customer support:

- Snowflake community, Snowflake’s online knowledge base, and support portal (for logging and tracking Snowflake support tickets).

- Premier support, which includes 24/7 coverage and a 1-hour response window for severity 1 issues.


4. Virtual Private Snowflake (VPS) edition:

Virtual Private Snowflake offers the highest level of security for organizations with the strictest requirements, such as financial institutions (banks, FinTech, etc.) and other large enterprises that need to collect, analyze, and share highly sensitive data.

Features of Virtual Private Snowflake (VPS):

Release management:

24-hour early access to weekly new releases, which can be used for additional testing/validation before each release is deployed to your production accounts.

Security, governance, and data protection:

- SOC2 Type Ⅱ certifications.

- Federated authentication and SSO for centralizing and streamlining user authentication.

- OAuth for authorizing account access without sharing or storing user login credentials.

- Network policies for limiting/controlling site access by user IP address.

- Automatic encryption of all data.

- Support for multi-factor authentication.

- Object-level access control.

- Standard time travel (up to 1 day) for accessing/restoring modified and deleted data.

- Disaster recovery of modified/deleted data (for 7 days beyond time travel) through fail-safe.

- Extended time travel (up to 90 days).

- Periodic rekeying of encrypted data for data protection.

- Column-level security to apply masking policies to columns in the tables or views.

- Row access policies to determine which rows are visible in a query result.

- Object tagging to apply tags to Snowflake objects to facilitate tracking sensitive data and resource usage.

- Customer-managed encryption keys through Tri-Secret Secure.

- Support for PHI data (in accordance with HIPAA and HITRUST CSF regulations).

- Support for PCI DSS.

- Support for FedRAMP Moderate data (in the government regions).

- Support for IRAP Protected (P) data (in the Asia Pacific regions).

- Dedicated metadata store and pool of compute resources (used in virtual warehouses).

Compute resource management:

- Virtual warehouses, separate compute clusters for isolating query and data loading workloads.

- Resource monitors for monitoring virtual warehouse credit usage.

- Multi-cluster virtual warehouses for scaling compute resources to meet concurrency needs.

SQL support:

- Standard SQL, including most DDL and DML, defined in SQL:1999

- Advanced DML such as multi-table INSERT, MERGE, and multi-merge.

- Broad support for standard data types.

- Native support for semi-structured data (JSON, Avro, ORC, Parquet, and XML).

- Native support for geospatial data.

- Native support for unstructured data.

- Collation rules for string/text data in table columns.

- Integrity constraints (not enforced) on table columns for informational and modeling purposes.

- Multi-statement transactions.

- User-defined functions (UDFs) with support for SQL, JavaScript, and Java.

- External functions for extending Snowflake to other development platforms.

- AWS private endpoints for external functions.

- JavaScript-based stored procedures.

- External tables for referencing data in a cloud storage data lake.

- Support for clustering data in very large tables to improve query performance with automatic maintenance of clustering.

- Search optimization for point lookup queries with automatic maintenance.

- Materialized views with automatic maintenance of the results.

Interfaces and tools:

- Full-featured web-based interface with native SQL worksheets capabilities.

- Snowsight, the next-generation SQL worksheet for advanced query development, data analysis, and visualization.

- SnowSQL, a command-line client for building/testing queries, loading/unloading bulk data, and automating DDL operations.

- SnowCD, a command-line diagnostic tool for identifying and fixing client connectivity issues.

- Programmatic interfaces for Python, Spark, Node.js, and Go.

- Native support for JDBC and ODBC.

- Extensive ecosystem for connecting to ETL, BI, and other third-party vendors and technologies.

- Snowflake Partner Connect for initiating free software/service trials with a growing network of partners in the Snowflake ecosystem.

- Snowpark, a library that provides an intuitive API for querying and processing data in a data pipeline.

Data import and export:

- Bulk loading from delimited flat files (CSV, TSV, etc) and semi-structured data files (JSON, Avro, ORC, Parquet, and XML).

- Bulk unloading to delimited flat files and JSON files.

- Snowpipe for continuous micro-batch loading.

- Snowflake Connector for Kafka for loading data from Apache Kafka topics.

Data pipelines:

- Streams for tracking table changes.

- Tasks for scheduling the execution of SQL statements, often in conjunction with table streams.

Data replication and failover:

- Database replication between Snowflake accounts (within an organization) to keep the database objects and stored data synchronized.

- Database failover and failback between Snowflake accounts for business continuity and disaster recovery.

Customer support:

- Snowflake community, Snowflake’s online knowledge base, and support portal (for logging and tracking Snowflake support tickets).

- Premier support, which includes 24/7 coverage and a 1-hour response window for severity 1 issues.

Final thought:

This article covered the Snowflake editions and the features each one provides. The Snowflake Data Cloud is a popular cloud-based platform available in four editions: Standard, Enterprise, Business Critical, and Virtual Private Snowflake. Stay tuned for more articles on the Snowflake Data Cloud.


About Author

As a senior Technical Content Writer for HKR Trainings, Gayathri has a good comprehension of the present technical innovations, which incorporates perspectives like Business Intelligence and Analytics. She conveys advanced technical ideas precisely and vividly, as conceivable to the target group, guaranteeing that the content is available to clients. She writes qualitative content in the field of Data Warehousing & ETL, Big Data Analytics, and ERP Tools. Connect me on LinkedIn.
