- What is Meant by ELK Stack?

- What is Elasticsearch?

- What is Logstash?

- What is Kibana?

- Why use ELK Stack?

- Elasticsearch installation

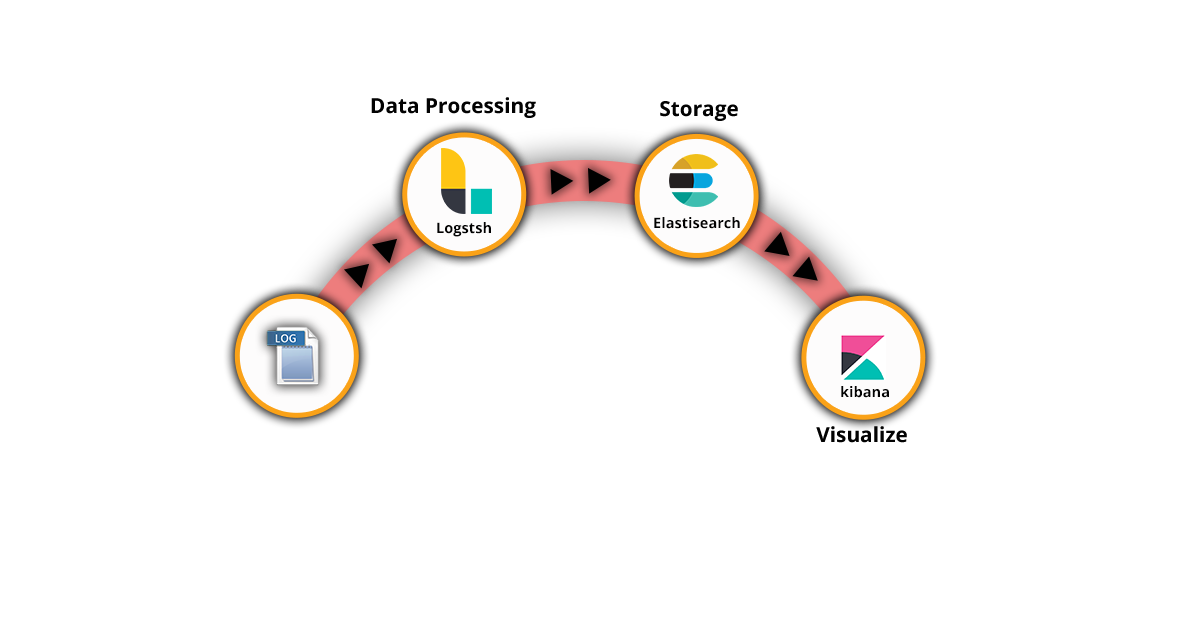

- ELK Stack Architecture

- Advantages and disadvantages of ELK Stack

What is Meant by ELK Stack?

In ELK Stack, ELK stands for Elasticsearch, Logstash, and Kibana, the three core components. Together they are used for log analysis in a wide range of IT environments. The ELK Stack is a popular open-source suite of tools that supports centralized logging, which helps locate issues in applications or web servers and lets you search all your log files in a single place.

Let us look at the three primary tools of the ELK Stack:

What is Elasticsearch?

Elasticsearch is one of the core components of the ELK Stack and is an open-source, distributed search and analytics engine. It helps us store, search, and analyze large volumes of data, and it suits applications that need a primary search engine.

It is built on the Apache Lucene search library and is mainly helpful for SPA projects.

Features

- Elasticsearch is a free-to-use text-search analytics engine.

- It is highly scalable and helpful for indexing different types of data.

- It analyzes large volumes of data in near real time.

- It returns search responses quickly.

- It supports multiple languages.

Advantages

- Because it is built on Java, it runs on many different platforms.

- Elasticsearch is capable of handling multi-tenancy.

- It provides faster search performance and helps to find the best match for the full-text search.

- Elasticsearch supports different languages and document types.

- It uses JSON as the serialization format for documents.
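To illustrate the JSON serialization mentioned above, here is a minimal Python sketch that turns a log event into the kind of JSON document Elasticsearch stores. The field names (timestamp, level, service, message) are assumptions chosen for illustration, not a required schema.

```python
import json

# A hypothetical log event; the field names are illustrative only.
event = {
    "timestamp": "2024-01-15T10:32:00Z",
    "level": "ERROR",
    "service": "checkout",
    "message": "payment gateway timed out",
}

# Serialize the event to a JSON string, the format used for documents.
document = json.dumps(event, sort_keys=True)
print(document)

# Parsing the string back yields the original structure.
restored = json.loads(document)
```

Because the document is plain JSON, any client that can produce JSON can prepare data for indexing.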

What is Logstash?

Logstash is an open-source data collection engine and pipeline tool that collects log data and forwards it. It gathers data from different sources, normalizes it, and sends it to the destinations you specify. Input, filter, and output are the three essential elements of a Logstash pipeline. Moreover, it helps to analyze logs and events from multiple sources in real time.
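The input, filter, and output stages can be pictured with a small Python sketch. This is only an analogy for how the three stages chain together, not Logstash's own configuration language; the log line format, the regular expression, and the function names are all assumptions made for this example.

```python
import re

# Pattern for a simplified access-log line (an assumption for this sketch,
# not Logstash's grok syntax).
LINE_PATTERN = re.compile(
    r'(?P<client>\S+) "(?P<method>[A-Z]+) (?P<path>\S+)" (?P<status>\d{3})'
)

def read_input(lines):
    """Input stage: yield raw lines, skipping blanks."""
    for line in lines:
        line = line.strip()
        if line:
            yield line

def apply_filter(raw_lines):
    """Filter stage: parse each line into an event, dropping unparseable ones."""
    for line in raw_lines:
        match = LINE_PATTERN.match(line)
        if match:
            event = match.groupdict()
            event["status"] = int(event["status"])
            yield event

def write_output(events, sink):
    """Output stage: append each event to the destination (a list here)."""
    for event in events:
        sink.append(event)
    return sink

sample = [
    '10.0.0.1 "GET /index.html" 200',
    "",
    "not a log line",
    '10.0.0.2 "POST /login" 500',
]
stored = write_output(apply_filter(read_input(sample)), [])
for event in stored:
    print(event)
```

In a real deployment the sink would typically be Elasticsearch rather than an in-memory list.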

Features

- It helps gather data from various sources and send them to different locations.

- Logstash allows different types of inputs for the logs.

- Logstash supports a wide range of web servers.

- We can use Logstash to manage sensor data in IoT.

- It helps handle multiple logging data such as Apache, Windows Event Logs, etc.

Advantages

- It offers different types of plugins to parse and filter logging data and convert it into whatever format is required.

- As an output destination for logging events, it supports multiple databases, internet protocols, and other services.

- It is centralized, allowing it to collect data from multiple servers and process it quickly.

- Its wide plugin support makes it flexible to use.

What is Kibana?

Kibana is a free-to-use data visualization tool that visualizes Elasticsearch documents and helps developers analyze them. It has a browser-based interface that makes large data volumes easier to work with. Further, it reflects changes in the Elasticsearch queries. Moreover, we can conduct advanced data analysis using Kibana and visualize data in various formats like graphs and charts. Let us look at the features and benefits of this tool.

Kibana installation

Go to Download Kibana and download the zip file that suits your operating system. Unzip the file to your designated installation path and run the following command:

bin\kibana.bat

Kibana will run on port 5601 by default. For more details about Kibana installation and usage, check out our Elasticsearch tutorial.

Features

- Kibana is a powerful front-end dashboard tool that helps visualize indexed data from the Elasticsearch cluster.

- It allows us to explore and visualize different types of data.

- It enables us to do real-time searches for the indexed data.

- Kibana offers multiple easy ways to visualize data.

- It allows users to use search queries and filters to get quick search results for a specific input through a dashboard.

Advantages

- It offers open-source browser-based data visualizations.

- Kibana is very simple to use and easy to learn for newbies.

- It helps to analyze extensive data of logs in different styles like charts, bars, graphs, etc.

- It allows us to save and manage different dashboards.

- Moreover, Kibana provides the easiest way to convert dashboards and data visuals into reports.

Why use ELK Stack?

The ELK Stack is a popular tool suite that helps build a reliable and flexible data-processing environment. Enterprises with cloud-based infrastructure in particular can benefit from it. It helps in the following ways:

ELK Stack helps collect and manage the large volumes of unstructured data produced by different servers and apps. Such data is not practical for humans to read directly, so the stack transforms it into meaningful information that supports informed decisions. As an open-source platform, the ELK Stack offers cost-effective solutions for both growing startups and established businesses. Moreover, it provides a powerful platform for monitoring performance and security, and it helps maintain uptime and regulatory compliance.

Further, ELK Stack helps identify gaps within the log files. It can parse data from different sources into a highly scalable central database. The Elastic Stack also supports both historical and real-time analysis of data, which makes troubleshooting much faster.

Thus, using ELK stack allows you to analyze log data, build data visuals for apps, monitor performance, and conduct security analysis. You can get these benefits from using this popular platform.

Elasticsearch Installation

To install Elasticsearch, follow the steps below:

Step (1) Before installing Elasticsearch, we must download and install Java on the system, because Elasticsearch cannot run properly without it. If Java is already installed, check that the version is 11 or higher. The first command below prints the installed Java version on both Windows and Linux; the second prints the Java home directory on Linux (on Windows CMD, use echo %JAVA_HOME% instead):

java -version

echo $JAVA_HOME

If a suitable version of Java is not installed on the system, we must download and install it. We also need to set the JAVA_HOME environment variable.
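The version check in Step (1) can also be scripted. The sketch below parses the text that java -version prints and compares the major version with the minimum of 11 stated above. The sample strings are illustrative, and the helper names are hypothetical.

```python
import re

def java_major_version(version_output):
    """Extract the major Java version from `java -version` style output.

    Handles both the legacy scheme ("1.8.0_201" means Java 8) and the
    current scheme ("11.0.2" means Java 11). Returns None if no version
    string is found.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    second = match.group(2)
    if first == 1 and second is not None:
        return int(second)
    return first

def meets_minimum(version_output, minimum=11):
    """Return True when the reported Java version is at least `minimum`."""
    major = java_major_version(version_output)
    return major is not None and major >= minimum

print(meets_minimum('openjdk version "11.0.2" 2019-01-15'))  # True
print(meets_minimum('java version "1.8.0_201"'))             # False
```

To use it against a live system, the output of java -version would need to be captured first; note that the command writes its report to standard error rather than standard output.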

Step (2) We can download and install Elasticsearch based on the operating system. A separate archive file is available for each OS, such as Windows and UNIX.

Step (3) On Windows, all we need to do to install Elasticsearch is unzip the zip file. On UNIX, we extract the tar file instead. Here is the process to install Elasticsearch:

$ wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.0.0-linux-x86_64.tar.gz

$ tar -xzf elasticsearch-7.0.0-linux-x86_64.tar.gz

Alternatively, on Debian-based Linux we can install through the APT utility. First, install the Public Signing Key:

$ wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Then we have to save the repository definition as follows:

$ echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

Now perform the update by entering the following command.

$ sudo apt-get update

Then, we can install Elasticsearch using the following command:

$ sudo apt-get install elasticsearch

Alternatively, download the Debian package manually and install it with the commands below:

$ wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.0.0-amd64.deb

$ sudo dpkg -i elasticsearch-7.0.0-amd64.deb

On RPM-based Linux distributions such as Red Hat or CentOS, we can use the YUM utility instead.

Here too, we first have to install the Public Signing Key.

$ rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Add the text below to a file with the .repo suffix in the /etc/yum.repos.d/ directory:

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

We can now install Elasticsearch by entering the following command:

sudo yum install elasticsearch

Step (4) Go to the Elasticsearch home directory and open its bin folder. On Windows, run the elasticsearch.bat file, either by double-clicking it or from the command prompt. On UNIX, run the elasticsearch file from the terminal.

Step (5) By default, the Elasticsearch web interface uses port 9200. We can change it by editing the http.port setting in the elasticsearch.yml file, which is located in the config directory. To verify that the server is up and running on the local host, browse to http://localhost:9200.
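The browser check in Step (5) can also be done from a script. The following is a minimal sketch using only the Python standard library; it assumes an unsecured local node on the default port, and the function names are hypothetical. A node with security enabled would reject the anonymous request, which this sketch simply reports as unreachable.

```python
import http.client
import json
import urllib.request

def elasticsearch_url(host="localhost", port=9200):
    """Build the root URL of the Elasticsearch HTTP interface."""
    return f"http://{host}:{port}/"

def fetch_server_info(url, timeout=3.0):
    """Return the JSON object served at the root URL, or None if unavailable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            data = json.loads(response.read().decode("utf-8"))
    except (OSError, ValueError, http.client.HTTPException):
        # Covers refused connections, timeouts, HTTP errors, and bad JSON.
        return None
    return data if isinstance(data, dict) else None

info = fetch_server_info(elasticsearch_url())
if info is None:
    print("Elasticsearch is not reachable on the default port.")
else:
    print("Elasticsearch responded:", info.get("version"))
```

If the port was changed through http.port, pass the new value as the port argument.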

Some essential stages of Elasticsearch

The following three stages are important in Elasticsearch:

- Indexing

- Searching

- Mapping

Indexing

Indexing is the process of adding data to Elasticsearch. The inserted data is stored in Apache Lucene indexes, which Elasticsearch later uses to store and retrieve it. Indexing is comparable to the create and update operations in CRUD.
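To give a feel for why an index makes searching fast, here is a deliberately simplified inverted index in Python. It maps each term to the documents that contain it, which is the general idea behind full-text indexes. This is an illustration under simplifying assumptions (naive tokenization, no scoring), not Lucene's actual implementation.

```python
from collections import defaultdict

def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return cleaned.split()

def build_inverted_index(documents):
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index

def search(index, query):
    """Return IDs of documents containing every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result

docs = {
    1: "Disk usage is high on server A",
    2: "Server B restarted after a disk error",
    3: "User login succeeded",
}
index = build_inverted_index(docs)
print(sorted(search(index, "disk server")))  # [1, 2]
```

Looking up a term is a dictionary access, so the cost of a search depends on the number of query terms rather than on scanning every document.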

Searching

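Searching works like a typical search query against a specific index and type. The request looks similar to: "POST index/type/_search". In Elasticsearch 7 and later, mapping types are deprecated, so the request is usually written as "POST index/_search". To narrow down the results, we can use three different mechanisms: queries, filters, and aggregations.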
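As a rough sketch of how queries, filters, and aggregations fit together, the Python snippet below builds a search request body that combines all three. The field names (message, status, host.keyword) and the aggregation name are hypothetical; the resulting JSON is what would be sent in the body of the _search request.

```python
import json

def build_search_body(text, status, bucket_field):
    """Combine a full-text query, a filter, and an aggregation in one body."""
    return {
        "query": {
            "bool": {
                # Query: scored full-text match on the message field.
                "must": [{"match": {"message": text}}],
                # Filter: exact, unscored restriction on the status field.
                "filter": [{"term": {"status": status}}],
            }
        },
        # Aggregation: count matching documents per value of bucket_field.
        "aggs": {"per_bucket": {"terms": {"field": bucket_field}}},
    }

body = build_search_body("timeout", 500, "host.keyword")
print(json.dumps(body, indent=2))
```

Putting the exact-match condition in the filter clause keeps it out of relevance scoring, which is the usual reason to separate it from the query.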

After downloading and installing Elasticsearch, we will download and install the two other components, Kibana and Logstash.

Install Kibana

Step 1) Download Kibana and, after extracting it, go to the bin folder inside the Kibana folder.

Step 2) Double-click the kibana.bat file to start the Kibana server.

Step 3) Wait for the Kibana server to start.

Step 4) To check whether the Kibana server is running, open a browser and go to localhost:5601.

Install Logstash

Step 1) After downloading the Logstash file, open the Logstash folder.

Step 2) Then, follow commands similar to those used for Elasticsearch to install and run Logstash.

Java must be installed first, using the same process described for Elasticsearch.

Step 3) Installing Logstash is simple on most platforms.

- For Windows OS, unzip the downloaded zip file to install Logstash.

- For UNIX OS, extract the tar file to any location to install Logstash. The command is:

$ tar -xvf logstash-5.0.2.tar.gz

On Debian-based Linux, we can use the APT utility.

First, we must install the Public Signing Key.

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Then install the apt-transport-https package before moving further.

sudo apt-get install apt-transport-https

Now, we have to save the repository definition as follows:

echo "deb https://artifacts.elastic.co/packages/8.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-8.x.list

Now run the update and be ready to install the Logstash.

sudo apt-get update

sudo apt-get install logstash

On RPM-based Linux distributions, we use the YUM utility instead. First, download and install the Public Signing Key.

sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Now add the text below to a file with the .repo suffix in the /etc/yum.repos.d/ directory.

[logstash-8.x]

name=Elastic repository for 8.x packages

baseurl=https://artifacts.elastic.co/packages/8.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

The repository is now ready for use, and we can install Logstash:

sudo yum install logstash

This completes the installation of all three components of the ELK Stack: Elasticsearch, Kibana, and Logstash.

Importance of Log management

Log management is very important for any enterprise because it lets us monitor our systems 24/7. System events, transaction events, and similar activity are all stored as logs. By reviewing these logs, we can tell whether the system is functioning properly and spot unusual patterns. This helps us identify and analyze issues quickly and act on them, so we stay ahead of potential problems and prevent disruptions to the systems involved.
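One simple way to spot an unusual pattern is to count error events per minute and flag the minutes that exceed a threshold. The Python sketch below shows the idea on a handful of made-up events; the timestamps, the event format, and the threshold are assumptions for illustration only.

```python
from collections import Counter

def errors_per_minute(events):
    """Count ERROR events per minute; each event is (iso_timestamp, level)."""
    counts = Counter()
    for timestamp, level in events:
        if level == "ERROR":
            # "2024-01-15T10:32:07" -> "2024-01-15T10:32"
            counts[timestamp[:16]] += 1
    return counts

def unusual_minutes(counts, threshold):
    """Return the minutes whose error count exceeds the threshold, in order."""
    return sorted(minute for minute, count in counts.items() if count > threshold)

events = [
    ("2024-01-15T10:31:02", "INFO"),
    ("2024-01-15T10:31:40", "ERROR"),
    ("2024-01-15T10:32:05", "ERROR"),
    ("2024-01-15T10:32:07", "ERROR"),
    ("2024-01-15T10:32:30", "ERROR"),
    ("2024-01-15T10:33:10", "INFO"),
]
counts = errors_per_minute(events)
print(unusual_minutes(counts, threshold=2))  # ['2024-01-15T10:32']
```

In practice this kind of bucketing is done over the indexed logs rather than in application code, but the logic is the same.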

ELK Stack Architecture

Here is the architecture of the ELK Stack, which shows the end-to-end flow of logs:

Logs are the events generated by any system. Logstash collects and processes the logs from various sources and then sends them to Elasticsearch, which stores and analyzes the data. Using Kibana, the logs can be visualized and managed.

Comparison between ELK Stack and Splunk

Here are some differences between ELK Stack and Splunk:

- Splunk is proprietary software, whereas ELK Stack is open-source

- Splunk forwarders come preconfigured for a variety of sources. In ELK Stack, Logstash is connected to data sources by using plugins

- The query syntax for ELK Stack is the Lucene query language, which many people are already familiar with, so it is easier to work with. The Splunk query syntax is SPL (Splunk Processing Language), which is based on MapReduce and developed by Splunk, so it has to be learned from scratch

- Both Splunk and ELK Stack store indices in the form of flat files

Advantages and disadvantages of ELK Stack

Advantages

- Logs from various sources are consolidated into a single ELK instance

- Get insights into your data at a single location

- Open-source and can be installed on-prem

- Easy to scale up vertically and horizontally

- It provides language clients for Python, Ruby, Java, .NET, etc

Disadvantages

- The components can become difficult to manage in a more complex setup with multiple nodes

- If the index size needed by complex queries is larger than the allocated memory, it results in out-of-memory exceptions

Conclusion

Here we conclude this ELK Stack tutorial. You have now seen how Elasticsearch, Logstash, and Kibana are used to search and analyze data. ELK Stack is a complete suite of open-source tools that solves the problems of centralized logging. Elasticsearch is an analytics engine, Kibana is an open-source data visualization tool, and Logstash is a popular pipeline tool. Together, they surface important information from log files, which helps prevent losses and uncover opportunities.

I hope this article gave you all the basic information about ELK Stack. You can leave a comment if you have any further queries.

About Author

As a senior Technical Content Writer for HKR Trainings, Gayathri has a good comprehension of the present technical innovations, which incorporates perspectives like Business Intelligence and Analytics. She conveys advanced technical ideas precisely and vividly, as conceivable to the target group, guaranteeing that the content is available to clients. She writes qualitative content in the field of Data Warehousing & ETL, Big Data Analytics, and ERP Tools.
