AWS DevOps Tutorial

Last updated on Jun 12, 2024

What do you know about AWS DevOps?

AWS DevOps combines two disciplines, development and operations, on the AWS platform. AWS provides the services and tooling that support both the development and the operational process. The main purpose of AWS DevOps tools is to let a single team handle the whole application lifecycle: development, testing of software components, deployment, and operations. This helps reduce the disconnect between software development, system administration, and quality assurance (QA).

What is DevOps?

The following points explain the concept behind Dev and Ops:

- DevOps promotes collaboration between the development and operations teams so that code is deployed faster, in an automated and repeatable way.

- DevOps increases the speed at which an organization delivers applications, products, or services, which in turn helps it serve its customers better.

- DevOps can also be defined as the alignment of IT operations with the software development lifecycle to improve communication and collaboration, including with end users.

- DevOps is valuable to any enterprise or organization: it improves both the speed and the quality of application delivery.

- DevOps is a methodology that brings developers and operations together. It also represents a change in IT culture: faster IT service delivery through agile practices in the context of a system-oriented approach.

- Because DevOps integrates development with operations, it is reported to improve quality by 22% and the frequency of application deployments by 17%.

A brief history of DevOps:

- In 2009, the first conference named DevOpsDays was held in Ghent, Belgium. It was founded by Belgian consultant Patrick Debois.

- In 2012, the State of DevOps report was launched; it was conceived by Alanna Brown.

- In 2014, the annual State of DevOps report was published by Nicole Forsgren, Gene Kim, Jez Humble, and others. In the same year, the report found that DevOps adoption was accelerating.

- In 2015, Nicole Forsgren, Gene Kim, and Jez Humble founded DORA (DevOps Research and Assessment).

- In 2018, Nicole Forsgren, Jez Humble, and Gene Kim published “Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations”.

Advantages of DevOps:

Below are a few key advantages of DevOps:

- With DevOps, the operations and development teams collaborate instead of working in complete isolation.

- Testing and deployment follow each build automatically rather than as isolated, after-the-fact activities, which shortens the overall build cycle.

- Without DevOps, team members spend a large amount of time designing, testing, and deploying software instead of building it.

- Automated code deployment replaces manual deployment, which reduces human errors in production.

- Coding and operations teams share a synchronized timeline instead of separate ones, which reduces delays.

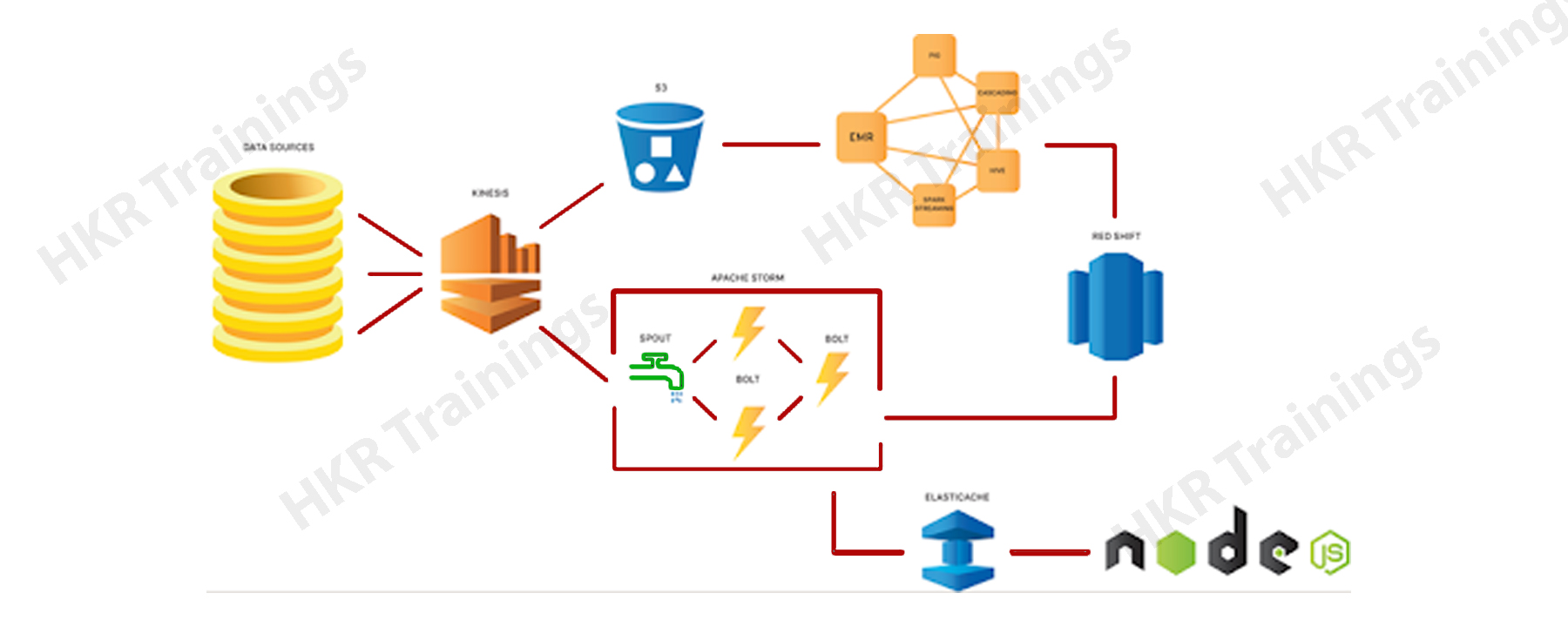

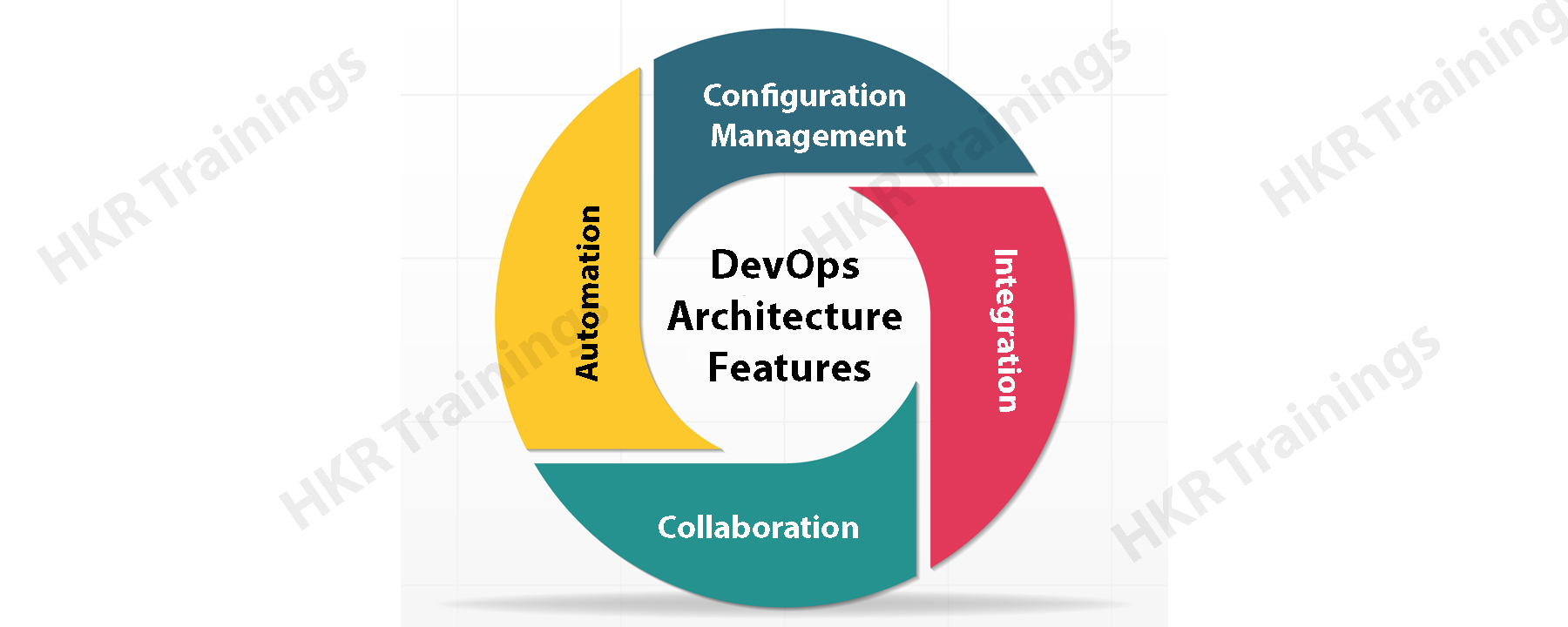

AWS DevOps architecture overview:

The AWS DevOps architecture describes the key features, the fundamental components, and the basic function of each component. The following figure explains this scenario:

Let me explain the components in brief:

1. Automation process:

Automation reduces the time spent during the testing and deployment stages. It also increases productivity and helps catch bugs quickly. For continuous delivery, every code change passes through automated tests, cloud-based automation services, and automated builds.

2. Collaboration process:

As mentioned earlier, DevOps means that the development and operations teams work together as one DevOps team. This collaboration improves the team culture and makes the team more productive. It also strengthens ownership and accountability: team members share responsibilities and work in close sync, which in turn makes deployments faster.

3. Integration process:

In any software environment, applications need to be integrated with other components. The integration process combines existing code with new functionality and then tests it. Continuous integration and continuous testing together enable continuous development. Frequent releases, especially of microservices, create significant operational challenges; continuous integration and continuous delivery overcome this hassle by delivering the product in a quicker, safer, and more reliable way.

4. Configuration management process:

This process ensures that the application interacts only with the resources that belong to the environment in which it runs. Configuration is kept in external files, separate from the source code, rather than being hard-coded. The configuration can be written during deployment or loaded by the application at run time, depending on the environment in which it runs.
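
As a minimal sketch of configuration kept outside the source code, the Python example below reads settings from environment variables at run time. The variable names (`APP_DATABASE_URL`, `APP_LOG_LEVEL`, `APP_MAX_WORKERS`) and the default values are assumptions made for illustration, not part of any AWS service.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    log_level: str
    max_workers: int


def load_config(env: Optional[Mapping[str, str]] = None) -> AppConfig:
    """Build the configuration from environment variables, with defaults.

    The variable names are hypothetical; a real project would choose its own.
    """
    env = os.environ if env is None else env
    return AppConfig(
        database_url=env.get("APP_DATABASE_URL", "sqlite:///local.db"),
        log_level=env.get("APP_LOG_LEVEL", "INFO"),
        max_workers=int(env.get("APP_MAX_WORKERS", "4")),
    )


# The same code runs unchanged in every environment; only the variables
# set at deployment time differ.
staging = load_config({"APP_LOG_LEVEL": "DEBUG"})
print(staging.log_level)
```

Passing a plain dictionary instead of `os.environ` keeps the function easy to test without touching the real environment.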

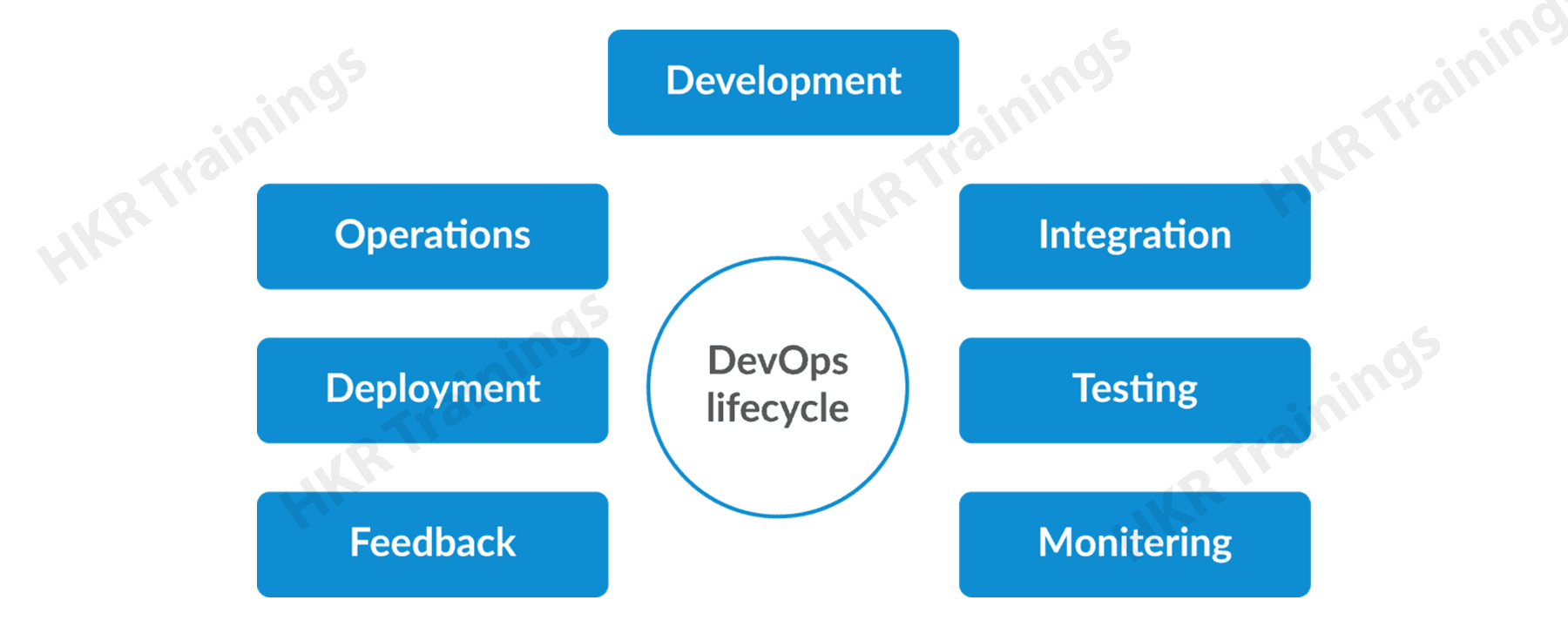

AWS DevOps Lifecycle:

The DevOps lifecycle defines an agile relationship between development and operations. It is a process practiced jointly by software developers and operations engineers, from the first stage to the last stage of the software development life cycle.

The following diagram explains the DevOps lifecycle process:

The AWS DevOps lifecycle includes 7 phases:

1. Continuous development:

This phase involves planning and coding the software. The vision of the project is decided during planning, and the developers then begin writing the code. Note that no DevOps tools are required for planning, but several tools are available for maintaining the code.

2. Continuous integration:

This stage is the heart of the DevOps lifecycle. Continuous integration is a software development practice in which developers commit changes to the source code frequently, on a daily or weekly basis. Every commit is then built, which allows problems to be detected early. Building the code involves not only compilation but also unit testing, integration testing, code review, and packaging.
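
The fail-fast behaviour of an integration build can be sketched in a few lines of Python. This is an illustrative model only, not the API of any CI server; the stage names and the `run_pipeline` helper are hypothetical.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a check that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order and skip everything after the first failure."""
    results = []
    failed = False
    for name, check in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = check()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results


# Hypothetical stages; real checks would invoke a compiler or a test runner.
report = run_pipeline([
    ("compile", lambda: True),
    ("unit tests", lambda: True),
    ("packaging", lambda: True),
])
for stage_name, outcome in report:
    print(f"{stage_name}: {outcome}")
```

Stopping at the first failed stage gives developers feedback early, which is the point of committing and building frequently.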

3. Continuous testing:

In this phase, the software is tested continuously for bugs. Many tools are available for this, such as TestNG, JUnit, and Selenium. These tools allow QA professionals to test multiple code bases thoroughly to ensure that there is no flaw in the functionality. Docker containers can also be used to simulate the test environment.

4. Continuous monitoring:

Monitoring is a phase that involves all the operational factors of the DevOps process. Important information about the use of the software is recorded and carefully processed. Monitoring is integrated into the operational capabilities of the software application.

5. Continuous feedback:

Application development is consistently improved by analyzing the results of operations. This is done by placing continuous feedback between operations and the development of the next version of the software application.

6. Continuous deployment:

In this phase, the code is deployed to the production servers. It is essential to ensure that the code is deployed correctly on all of them. New code is deployed continuously, and configuration management tools play a vital role in executing these tasks quickly and frequently. Popular tools include Chef, Ansible, SaltStack, and Puppet.

7. Continuous operations:

All DevOps operations are based on continuity, with a fully automated release process that allows an organization to shorten its overall time to market. Continuity is clearly a critical factor in DevOps: it reduces the time needed to detect issues and to produce a better version of the product. With AWS DevOps, we can build software products more efficiently and improve customer satisfaction.

DevOps workflow and principles:

A DevOps workflow gives a visual overview of the sequence in which inputs are processed. It shows which actions are performed and what output each operation produces.

The below diagram explains this workflow functionality:

A DevOps workflow makes it possible to separate and arrange the jobs that users request. It can also mirror the users' own process in the way those jobs are configured.

DevOps principles:

The main principles of AWS DevOps are continuous delivery, fast reaction to feedback, and automation.

Let me explain them one by one:

1. End to end software development responsibility:

The DevOps team supports the product's performance all the way through release and operation, not only during development. This increases both the team's sense of responsibility and the quality of the software products.

2. Continuous improvement process:

DevOps culture focuses on continuous improvement to minimize waste. It continuously speeds up the improvement of the products and services offered.

3. Automate everything:

Automation is a vital principle of DevOps. It applies both to software development and to the whole infrastructure landscape.

4. Customer-centric action:

The DevOps team must act in a customer-centric way and constantly invest in products and services.

5. Monitor and test everything:

The DevOps team needs robust monitoring and testing procedures.

6. DevOps teams work as one team:

In DevOps culture, roles such as designer, developer, and tester are already defined. To produce an efficient outcome, they all need to work as one team.

All these DevOps principles can be achieved through several development and operations practices. A few of them are:

- Self-service configuration

- Continuous build

- Continuous integration

- Continuous delivery

- Incremental testing

- Automation of provisioning

- Automated release management

DevOps tools and features:

DevOps relies on a set of mature tools whose features help teams build effective software products.

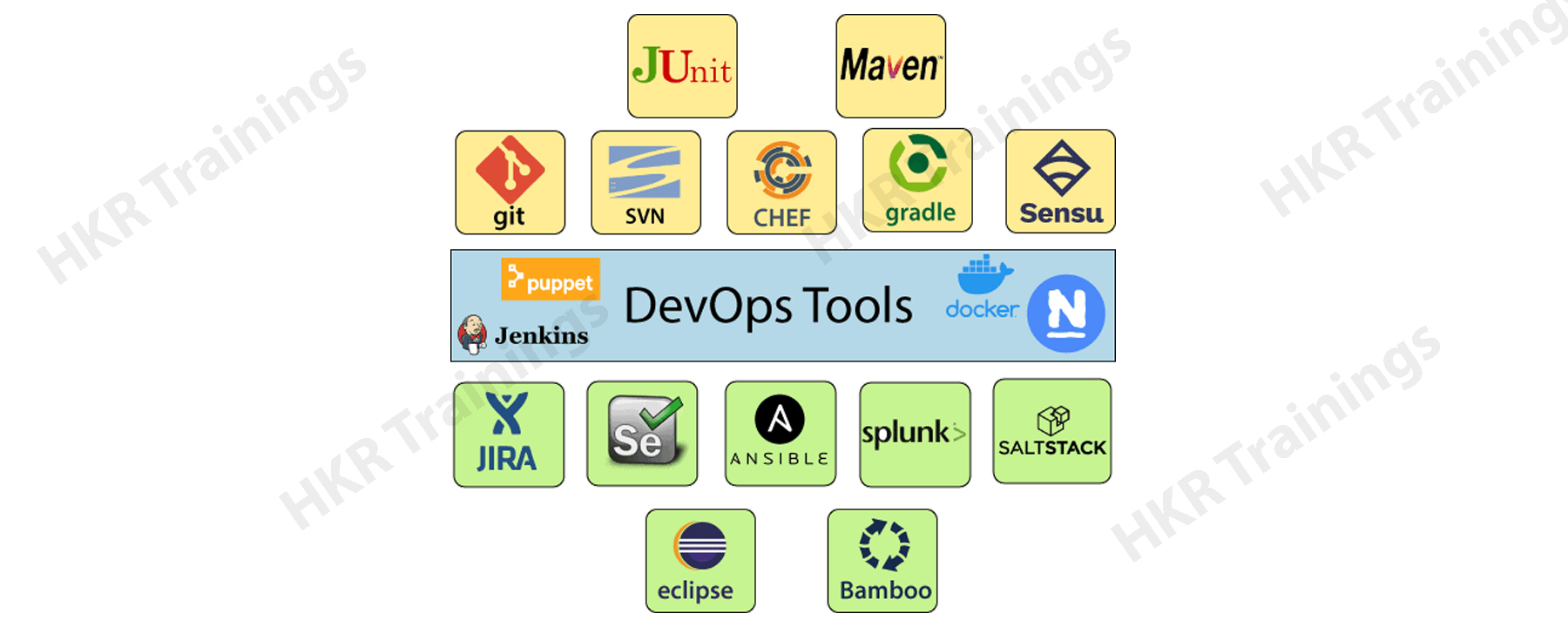

The following diagram explains the important tools:

1. Puppet:

Puppet is one of the most popular and widely used DevOps tools. It allows technology changes to be delivered and released quickly and frequently. Puppet offers features such as versioning, automated testing, and continuous delivery.

Features of Puppet:

- It offers real-time, context-aware reporting.

- It models and manages the entire environment.

- It defines infrastructure and continually enforces it.

- It detects conflicts with the desired state and remediates them.

- It inspects and reports on the packages running across the infrastructure.

- It eliminates manual work in the software delivery process.

- It helps developers deliver great software quickly.
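
The desired-state idea in the list above can be illustrated with a minimal Python sketch. This is not Puppet's language or API: `plan_changes` and `apply_changes` are hypothetical helpers, and a dictionary of package versions stands in for a real node.

```python
def plan_changes(desired: dict, actual: dict) -> list:
    """Return the actions needed to move `actual` to `desired`."""
    actions = []
    for name, version in sorted(desired.items()):
        if name not in actual:
            actions.append(("install", name, version))
        elif actual[name] != version:
            actions.append(("upgrade", name, version))
    for name in sorted(set(actual) - set(desired)):
        actions.append(("remove", name, actual[name]))
    return actions


def apply_changes(actual: dict, actions: list) -> dict:
    """Apply planned actions to a copy of the current state."""
    result = dict(actual)
    for action, name, version in actions:
        if action == "remove":
            result.pop(name)
        else:
            result[name] = version
    return result


# Illustrative package names and versions only.
desired_state = {"nginx": "1.24", "openssl": "3.0"}
current_state = {"nginx": "1.18", "telnet": "0.17"}

plan = plan_changes(desired_state, current_state)
converged = apply_changes(current_state, plan)
print(plan)
print(plan_changes(desired_state, converged))  # nothing left to do
```

Running the plan a second time produces no actions. This property, idempotence, is what makes it safe to enforce the desired state continually.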

2. Ansible:

Ansible is one of the leading DevOps tools. It is an open-source IT engine that automates application deployment, cloud provisioning, and intra-service orchestration. Its main purpose is to scale automation and speed up productivity. Ansible is easy to deploy because it uses no agents and no custom security infrastructure on the client side; it works by pushing modules to the clients.

Features:

- It is an easy-to-use, open-source tool for deploying applications.

- It helps avoid complexity in the software development process.

- It eliminates repetitive tasks.

- It manages complex deployments and speeds up the development process.

3. Docker:

Docker is a high-end DevOps tool for building, shipping, and running distributed applications on multiple systems. It also helps assemble applications quickly from their components and is well suited to container management.

Features:

- It makes configuring systems easier and faster.

- It increases productivity.

- It provides containers that run applications in isolated environments.

- Its routing mesh routes incoming requests for published ports on available nodes to an active container, so connections work even if no task is running on the node that receives the request.

- It allows secrets to be stored in the swarm itself.

4. Nagios:

Nagios is one of the more useful monitoring tools in DevOps. It helps detect errors and rectify them through network, server, and log monitoring.

Features:

- It offers complete monitoring of desktop and server operating systems.

- Its network analyzer helps identify bottlenecks and optimize bandwidth utilization.

- It monitors components such as services, applications, operating systems, and network protocols.

- It can also monitor Java Management Extensions (JMX).
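
To make the monitoring idea concrete, here is a minimal sketch of a threshold check written in the style of a Nagios plugin, which reports its result through the exit codes 0 (OK), 1 (WARNING), 2 (CRITICAL), and 3 (UNKNOWN). The thresholds, the function names, and the message format are assumptions chosen for illustration.

```python
import shutil

# Exit codes used by the Nagios plugin convention.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def classify(used_percent: float, warn: float = 80.0, crit: float = 90.0) -> int:
    """Map a usage percentage to a status code (thresholds are assumptions)."""
    if used_percent >= crit:
        return CRITICAL
    if used_percent >= warn:
        return WARNING
    return OK


def check_disk(path: str = "/") -> tuple:
    """Return (status code, one-line message) for the disk holding `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    used_percent = 100.0 * usage.used / usage.total
    status = classify(used_percent)
    label = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL"}[status]
    return status, f"DISK {label} - {used_percent:.1f}% used on {path}"
```

A wrapper script would print the message and exit with the returned status code, for example via `sys.exit(code)`, so that the monitoring server can decide whether to send a notification.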

5. Chef:

Chef is useful for achieving speed, scale, and consistency. It is an open-source technology that also works with cloud-based systems. Chef uses Ruby to define its basic building blocks, such as recipes and cookbooks. It is mainly used for infrastructure automation and reduces the manual, repetitive tasks of infrastructure management.

Features:

- It maintains high availability.

- It can manage multiple cloud environments.

- It uses the Ruby language to create a domain-specific language.

- It makes no assumptions about the current state of a node; it uses its own mechanisms to determine the machine's current state.

6. Jenkins:

Jenkins is a tool for monitoring the execution of repeated tasks. It lets teams practice continuous integration, and it is installed on a central build server.

Features:

- It increases the scale of automation.

- It is easy to set up and configure through a web interface.

- It can distribute tasks across multiple machines, which increases concurrency.

- It supports continuous integration and continuous delivery.

- It offers more than 400 plugins to build and test virtually any project.

- It requires little maintenance and includes GUI tools.
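
The benefit of distributing independent jobs can be sketched with Python's standard library. This is not the Jenkins API: local threads stand in for build machines, and `run_job` is a hypothetical placeholder for real build or test work.

```python
from concurrent.futures import ThreadPoolExecutor


def run_job(name: str) -> str:
    """Placeholder for real work such as compiling or testing one module."""
    return f"{name}: done"


def run_jobs_concurrently(job_names, max_workers: int = 3):
    """Run independent jobs in parallel and return results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_job, job_names))


for line in run_jobs_concurrently(["build-api", "build-web", "run-tests"]):
    print(line)
```

Only jobs that do not depend on each other should be run this way; dependent steps still have to run in sequence.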

7. Git:

Git is an open-source distributed version control system that is freely available to everyone. It is designed to handle projects of every size, from small to very large, with speed and efficiency. It was developed to coordinate work among programmers.

Features:

- It is a free, open-source tool.

- It allows distributed development.

- It supports pull requests.

- It enables a faster release cycle.

- Git is very scalable.

- It is very secure.

8. SaltStack:

SaltStack is a lightweight DevOps tool. It shows real-time error queries, logs, and workstation activity. It is also a good fit for software-defined data centers.

Features:

- It eliminates messy configuration and data changes.

- It can trace the details of all types of web requests.

- It helps find and fix bugs before production.

- It provides secure access and configures image caches.

- It secures multi-tenancy with role-based access control.

- It offers flexible image management with a private registry to store and manage images.

9. Splunk:

Splunk is a tool that makes machine data accessible, usable, and valuable to everyone. It also delivers operational intelligence and supports secure system development.

Features:

- It provides a next-generation monitoring and analytics solution.

- It delivers a single, unified view of different IT services.

- It extends the Splunk platform with purpose-built solutions.

- It offers data-driven analytics with actionable insights.
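
The kind of aggregation a log management tool performs can be sketched in plain Python. This does not use Splunk or its query language; the log line format (timestamp, level, message) is an assumption made for the example.

```python
import re
from collections import Counter

# Assumed line format: "<timestamp> <LEVEL> <message>".
LINE_PATTERN = re.compile(r"^(?P<timestamp>\S+) (?P<level>[A-Z]+) (?P<message>.*)$")


def count_levels(lines):
    """Count log lines per severity level, ignoring lines that do not parse."""
    counts = Counter()
    for line in lines:
        match = LINE_PATTERN.match(line)
        if match:
            counts[match.group("level")] += 1
    return counts


sample = [
    "2024-06-12T10:00:00Z INFO service started",
    "2024-06-12T10:00:05Z ERROR database timeout",
    "2024-06-12T10:00:06Z ERROR retry failed",
]
print(count_levels(sample).most_common())
```

A real log platform adds indexing, storage, and search on top of this basic parse-and-aggregate step.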

10. Selenium:

Selenium is one of the most popular testing frameworks for web applications. It provides an easy interface for developing automated tests.

Features:

- It is a free, open-source tool.

- It supports multiple platforms for testing, such as Android and iOS.

- It makes it easy to build a keyword-driven framework for WebDriver.

- It creates robust, browser-based regression automation suites and test scripts.

DevOps Automation overview:

Testing plays an important role in any software development cycle before code reaches production, and in DevOps that testing is mainly automated. Why do we need automation? Because automation is a crucial need for DevOps: almost every fundamental DevOps practice can be automated, from code generation to final product delivery. The main reasons to automate are to increase speed, consistency, accuracy, and reliability, and to increase the number of deliveries. Automation covers everything from building and deploying applications to monitoring.

Important DevOps Automation tools:

Automation in DevOps helps maintain large IT infrastructures, and its tools can be classified into six major categories:

- Infrastructure automation

- Configuration management tool

- Deployment automation tool

- Performance management tool

- Log management tool

- Monitoring tool

Below are the important details about each automation tool:

1. Infrastructure Automation:

The main tool in this category is Amazon Web Services (AWS). Because it is a cloud service, you do not need to be physically present in a data center. Infrastructure is easy to scale on demand, and there are no upfront hardware costs. It can be configured to provision more servers automatically based on traffic.

2. Configuration management tool:

In this category we use Chef to achieve speed, scale, and consistency. It is used to ease complex tasks and perform configuration management. With Chef, the DevOps team can avoid making changes manually across many servers; a change made in one place is applied to the others automatically.

3. Deployment automation tool:

In this category we use Jenkins. It facilitates continuous integration and testing, and it helps integrate project changes more efficiently by finding issues quickly, as soon as a build is deployed.

4. Performance management tool:

Here we use AppDynamics, which offers real-time performance monitoring. The data it collects helps developers debug issues as soon as they occur.

5. Log management tool:

Splunk: this tool solves problems such as aggregating, storing, and analyzing all logs in one place.

6. Monitoring tool:

Nagios: this tool notifies people when infrastructure and related services go down. Nagios helps the DevOps team find problems and correct them.

DevOps vs. Agile:

Now it’s time to look at the major differences between DevOps and Agile:

First, we will discuss DevOps:

DevOps:

- DevOps is a practice of bringing the development and operations teams together.

- DevOps is used to manage end-to-end engineering processes.

- DevOps focuses on constant testing and delivery.

- A DevOps team is usually large, because it includes all the stakeholders.

- DevOps focuses on collaboration, so it has no commonly accepted framework.

Agile:

- Agile is a continuous, iterative approach that focuses on collaboration, customer feedback, and small, rapid releases.

- Agile focuses on constant change during the development life cycle.

- The main purpose of Agile is to manage complex projects.

- An Agile team has fewer members than a DevOps team. The smaller the team, the faster it can move.

- Agile can be implemented within tactical frameworks such as SAFe (Scaled Agile Framework) and Scrum, which organizes work into sprints.

Conclusion:

AWS DevOps is only one part of the development and operations process. From this tutorial you can learn concepts such as automation tools, infrastructure, frameworks, and workflow. Will learning AWS DevOps alone make you an AWS expert? The answer is no: this tutorial only helps you understand and implement DevOps. If you want to become an AWS cloud expert, please go through our AWS Tutorial blog, designed under the guidance of our team of SMEs.

Related Articles:

What is AWS?

AWS stands for Amazon Web Services. It is a platform that uses distributed IT infrastructure to provide different IT resources on demand. AWS was developed by Amazon and provides services such as infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS). AWS is best known as a cloud computing platform, and many organizations use it to benefit from reliable IT infrastructure. AWS supports many programming languages, such as Node.js, Python, Java, C#, R, and Go. It also includes compute services that let programmers run code without provisioning or managing servers.

Now it’s time to know the history of AWS:

AWS evolution:

In this section you will learn about the history of AWS:

1. 2003: In 2003, two engineers, Chris Pinkham and Benjamin Black, wrote a paper (you can call it a blueprint) describing what Amazon’s own internal computing infrastructure should look like. They proposed selling it as a service and prepared a business case for it. The proposal was written up as a six-page document, and the decision was made to proceed with it.

2. 2004: In 2004, Amazon launched its first service, SQS (Simple Queue Service). Around the same time, a development team in Cape Town, South Africa, began building what became EC2.

3. 2006: In 2006, AWS (Amazon Web Services) was officially launched.

4. 2007: By 2007, more than 180,000 developers had signed up for AWS.

5. 2010: In 2010, the Amazon.com retail web services were moved to AWS; in other words, Amazon.com now runs on AWS.

6. 2011: In 2011, AWS suffered severe problems: some EBS (Elastic Block Store) volumes became stuck and were unable to serve read and write requests. It took two days to resolve the problem.

7. 2012: In 2012, AWS hosted its first customer event, the re:Invent conference. In the years that followed, revenue reached $6 billion USD per annum and grew by about 90% every year.

8. 2016: By 2016, AWS revenue had doubled and reached more than $13 billion USD per annum.

9. 2017: In 2017, AWS re:Invent released a host of artificial intelligence services, and AWS revenue doubled again to more than $27 billion USD per annum.

10. 2018: In 2018, AWS launched a Machine Learning Specialty certification, with a strong focus on automating artificial intelligence and machine learning.

AWS Architecture:

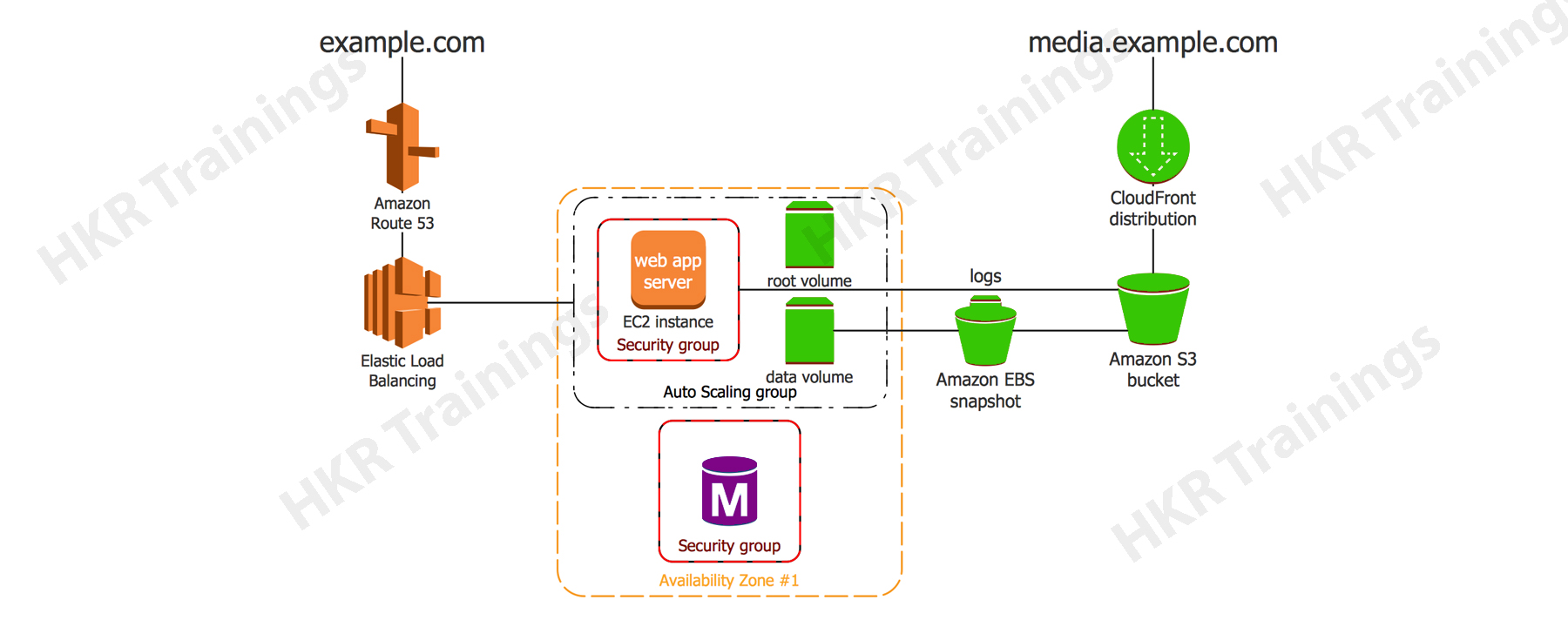

The AWS architecture shown here explains how the platform works and what it offers:

This architecture is the basic structure of AWS EC2. EC2 stands for Elastic Compute Cloud; it lets clients configure virtual machines according to their requirements. It offers several options, such as pricing options, server configuration, and individual server mapping. S3 (Simple Storage Service) is also part of the AWS architecture. With S3, users can store and retrieve data of various types through application programming interface (API) calls. S3 itself contains no compute element.

Components of AWS architecture:

The following are the important components of AWS architecture:

1. Load balancing:

Load balancing is the component of the AWS architecture that improves the efficiency of applications and servers. As the AWS diagram shows, a hardware load balancer is the common network appliance used for this purpose in traditional web application architectures.

2. Elastic load balancer:

The Elastic Load Balancer can shrink and grow its load-balancing capacity to match traffic demand, and it supports sticky sessions for more advanced routing needs.

3. Amazon CloudFront:

This component is used for content delivery, that is, for delivering websites efficiently. Content served through Amazon CloudFront can be static, dynamic, or streaming, and it is delivered from a global network of locations.

4. Elastic load balancer:

The Elastic Load Balancer delivers incoming traffic to the web servers, which improves performance considerably. Its capacity grows dynamically and can shrink again, depending on traffic conditions.
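
How a load balancer spreads traffic while honouring sticky sessions can be sketched as follows. This is a simplified round-robin model written for illustration, not the algorithm or API of the AWS Elastic Load Balancer; the class name and target names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Round-robin routing with optional sticky sessions."""

    def __init__(self, targets):
        self._cycle = itertools.cycle(targets)
        self._sticky = {}  # session id -> target already chosen for it

    def route(self, session_id=None):
        # A known session keeps going to the same target.
        if session_id is not None and session_id in self._sticky:
            return self._sticky[session_id]
        target = next(self._cycle)
        if session_id is not None:
            self._sticky[session_id] = target
        return target


balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
print(balancer.route("alice"))
print(balancer.route())
print(balancer.route("alice"))
```

Requests without a session id simply rotate through the targets, while a returning session is pinned to the server that handled it first.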

5. Security management:

Security management is provided through a feature known as security groups. A security group works like an inbound network firewall in which you specify the ports, protocols, and source IP ranges that are allowed to reach your EC2 instances. By restricting source IP addresses, security groups limit access to EC2 instances effectively.
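
The inbound filtering described above can be modelled in a few lines of Python. This sketch does not call any AWS API; `InboundRule` and `is_allowed` are hypothetical names, and the addresses come from ranges reserved for documentation.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    protocol: str      # for example "tcp"
    port: int          # destination port that is opened
    source_cidr: str   # source range allowed to connect


def is_allowed(rules, protocol: str, port: int, source_ip: str) -> bool:
    """Allow traffic only if some rule matches; everything else is denied."""
    address = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (rule.protocol == protocol
                and rule.port == port
                and address in ipaddress.ip_network(rule.source_cidr)):
            return True
    return False  # default deny


rules = [
    InboundRule("tcp", 22, "203.0.113.0/24"),  # SSH from one office range
    InboundRule("tcp", 443, "0.0.0.0/0"),      # HTTPS from anywhere
]
print(is_allowed(rules, "tcp", 22, "203.0.113.7"))
print(is_allowed(rules, "tcp", 22, "198.51.100.1"))
```

The rules only ever allow traffic; anything that no rule matches is rejected, which is why the function ends with a default deny.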

6. Amazon ElastiCache:

Amazon ElastiCache is a service that manages an in-memory cache in the cloud. The cache plays a vital role in memory management and helps reduce the load on other services. It also improves the performance and scalability of the database tier.
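
One common way a cache reduces database load is the cache-aside pattern, sketched below. An in-process dictionary stands in for the managed cache, and `load_from_database` is a hypothetical callback; no AWS or cache client library is used.

```python
class CacheAside:
    """Look in the cache first and fall back to the database on a miss."""

    def __init__(self, load_from_database):
        self._load = load_from_database
        self._cache = {}
        self.database_reads = 0  # counts how often the slow path is taken

    def get(self, key):
        if key in self._cache:
            return self._cache[key]
        self.database_reads += 1
        value = self._load(key)
        self._cache[key] = value
        return value


# A dictionary plays the role of the database in this sketch.
fake_database = {"user:1": "Ada", "user:2": "Linus"}
store = CacheAside(fake_database.get)

store.get("user:1")
store.get("user:1")
print(store.database_reads)
```

A production cache would also need expiry and invalidation when the underlying data changes, which this sketch leaves out.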

7. Amazon RDS:

Amazon RDS (Relational Database Service) provides managed access to databases with the same functionality as familiar engines such as MySQL or Microsoft SQL Server. The same queries, applications, and tools can be used with Amazon RDS.

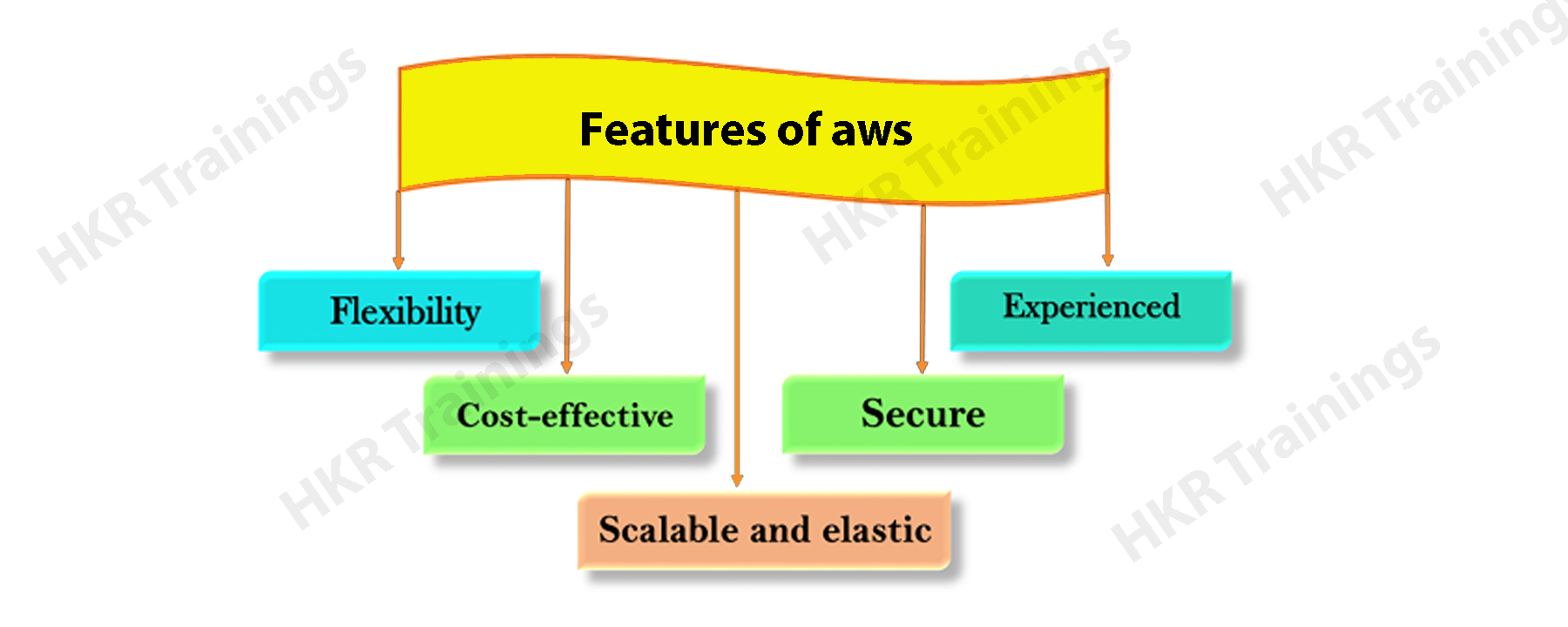

AWS features:

The following are the important features of AWS:

1. Flexibility:

Flexibility is one of the crucial features of AWS compared with traditional IT models. Delivering an IT solution under a traditional model used to require massive investment in new architecture, programming languages, and operating systems. Such investments are valuable, but adopting new technologies takes time, and that slows down the business.

The flexibility of AWS lets users choose the programming models, programming languages, and operating systems that suit their projects best, so there is no need to learn new technologies. Building an application on AWS is like building an application on existing hardware resources.

2. Cost-effective:

Cost is one of the most important factors to consider when delivering IT solutions. The cloud provides on-demand IT infrastructure, so you pay only for what you use instead of paying for hardware, power, real estate, and staff up front. The cloud also offers flexibility by keeping the right balance of resources. AWS requires no upfront investment, no minimum spend, and no long-term commitment, and users can scale resources up or down as demand increases or decreases.

3. Scalable and elastic:

In a traditional IT organization, scalability and elasticity were planned around investment and infrastructure; in the cloud, they provide savings and an improved return on investment. Scalability in AWS is the ability to scale computing resources up or down as demand changes. Elasticity in AWS, through elastic load balancing, distributes incoming application traffic across multiple targets such as Amazon EC2 instances, containers, IP addresses, and Lambda functions.
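
Scaling resources with demand can be illustrated with a simplified target-tracking calculation. The formula below is a teaching sketch and an assumption, not the exact algorithm AWS Auto Scaling uses; the parameter names and limits are hypothetical.

```python
import math


def desired_capacity(current_instances: int,
                     average_cpu: float,
                     target_cpu: float = 50.0,
                     minimum: int = 1,
                     maximum: int = 10) -> int:
    """Estimate how many instances keep average CPU near the target."""
    if current_instances < 1 or target_cpu <= 0:
        raise ValueError("need at least one instance and a positive target")
    # Scale the fleet in proportion to how far the metric is from the target.
    wanted = math.ceil(current_instances * average_cpu / target_cpu)
    # Never go below the minimum or above the maximum fleet size.
    return max(minimum, min(maximum, wanted))


print(desired_capacity(4, average_cpu=75.0))  # load is high: scale out
print(desired_capacity(4, average_cpu=10.0))  # load is low: scale in
```

The minimum and maximum bounds keep the fleet from shrinking to nothing or growing without limit when the metric spikes.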

4. Secure:

AWS offers a scalable cloud computing platform that gives customers end-to-end security and end-to-end privacy. AWS builds security into its services and publishes documents that describe how to use the security features. AWS services maintain the confidentiality, integrity, and availability of resources. One kind of security AWS provides is physical security: securing the data centers to prevent unauthorized access.

5. Experienced:

The AWS cloud provides a high level of scale, security, reliability, and privacy. AWS has built its infrastructure on lessons learned from over twenty years of managing the multi-billion-dollar Amazon.com business, and it continues to help customers by enhancing its infrastructure capabilities.

Why is AWS used?

There are many advantages to using AWS. A few of them are:

1. AWS provides world-class performance at an hourly rate, whereas traditional hardware in bigger companies sits unused about 90% of the time.

2. During peak hours, traditional hardware may not be sufficient to provide adequate service. This is why most big organizations have shifted to the AWS cloud.

3. Companies do not have to take on hardware maintenance and the cost that comes with it. No matter how high the demand, AWS can scale to meet it.

4. AWS is also helpful for big data analytics. Code can be deployed continuously on AWS because DevOps processes are well supported, and you pay only for the services you consume.

5. AWS offers a free tier: a user can open an account and start using the available services, including 750 free hours.

AWS Lambda:

AWS Lambda is a serverless computing service: it runs your code without requiring you to provision or manage any servers. Code is executed in response to AWS events, such as files being added to or removed from Amazon S3 buckets, updates to Amazon DynamoDB tables, and HTTP requests arriving through Amazon API Gateway. AWS Lambda supports many programming languages, such as Node.js, Python, Java, C#, Ruby, and Go.

AWS Lambda executes code only when needed and scales automatically, from a few requests per day to thousands per second. You pay only for the compute time you consume; there is no charge when your code is not running. To work with AWS Lambda, you upload your code to the service, which then takes care of the rest: infrastructure resources, operating systems, server maintenance, code monitoring, logging, and security.
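
The event-driven model can be illustrated with a minimal Python handler for S3 upload notifications. The function name `lambda_handler` and the shape of the returned dictionary are assumptions made for this sketch; the bucket and key fields follow the structure of S3 event notifications, in which object keys arrive URL-encoded.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Entry point that the Lambda service calls with the triggering event."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 notifications are URL-encoded.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    return {"processed": len(uploaded), "objects": uploaded}


# Local dry run with a hand-written event; no AWS account is needed.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "reports/june+summary.csv"}}}
    ]
}
print(lambda_handler(sample_event, None))
```

Because the handler is an ordinary function, it can be exercised locally with a sample event before it is deployed.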

AWS Lambda architecture:

The Lambda architecture is a design pattern for big data processing. It shares its name with the AWS Lambda service but is a separate concept, and it can be implemented on AWS with serverless services. Let's discuss the architecture in brief:

The Lambda architecture is made up of three layers:

1. Batch layer:

This layer holds the master dataset, the gold-standard source of truth that contains every bit of raw information. New incoming data is appended to it. The batch layer depends on services that are capable of processing large amounts of data, and on AWS these can be serverless services. The batch layer runs predefined queries and performs operations such as aggregations, computations, and derived metrics.

2. Serving layer:

The serving layer is one of the more complex layers of the Lambda architecture. It indexes the results produced by the other layers so that they can be queried with low latency. Providing fast queries over the data is considered the hardest part of the Lambda architecture to achieve.

3. Speed layer:

The speed layer processes and analyzes real-time data streams. It stores small snapshots of recent data, so users can work with up-to-date derived data sets.

In brief, the batch layer maintains a historical store of derived data sets; the serving layer provides key-value access to derived metrics and keeps data ready for analysis, machine learning, and visualizations. Finally, the speed layer applies the same kind of processing to the most recent data, so users can preview results without waiting for the next batch run.

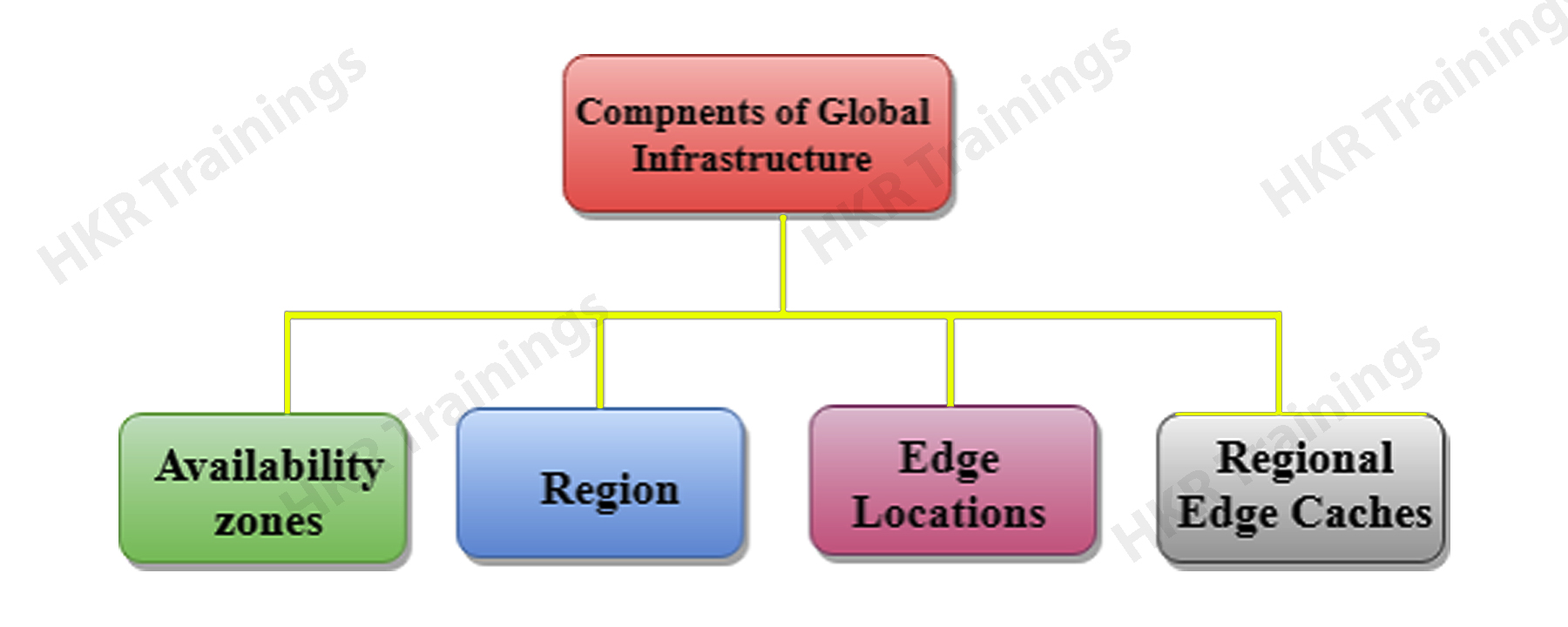
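The interplay of the three layers can be sketched in a few lines of Python. This is a toy, in-memory illustration only: the page-view events, the `build_view` and `query` helpers, and the use of `Counter` are all assumptions made for the example, not part of any AWS service.

```python
from collections import Counter

# Master dataset: an append-only log of (page, views) events (hypothetical data).
master_dataset = [("home", 3), ("docs", 1), ("home", 2)]

# Events that arrived after the last batch run and are not yet in a batch view.
recent_events = [("docs", 4), ("pricing", 1)]


def build_view(events):
    """Aggregate raw events into a view of total views per page."""
    view = Counter()
    for page, views in events:
        view[page] += views
    return view


def query(page, batch_view, realtime_view):
    """Serving-side query: merge the precomputed and the real-time results."""
    return batch_view[page] + realtime_view[page]


batch_view = build_view(master_dataset)    # batch layer: complete but slow to refresh
realtime_view = build_view(recent_events)  # speed layer: recent data only

if __name__ == "__main__":
    for page in ("home", "docs", "pricing"):
        print(page, query(page, batch_view, realtime_view))
    # home 5
    # docs 5
    # pricing 1
```

The query merges both views, which is why recent events show up in the results before the batch layer has reprocessed the master dataset.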

AWS – Global infrastructure

As mentioned earlier, AWS is a cloud platform that is available globally. The global infrastructure is the set of physical locations where the AWS cloud is hosted, and it is made up of several high-level components.

Below are the important components used in global infrastructure:

1. Availability zones

2. Region

3. Edge locations

4. Regional edge caches

1. Availability zone as a data center:

An availability zone is a facility located somewhere within a country or city. The facility houses a large number of components, such as servers, switches, firewalls, and load balancers, and the resources you use in the cloud run inside these data centers. An availability zone can consist of several data centers; if they are close together, they form one availability zone.

2. A region:

A region is a geographical area. Each region consists of two or more availability zones. The availability zones in a region are isolated from one another and connected through low-latency links.

3. Edge locations:

An edge location is an endpoint for AWS services and is mainly used for caching content. Edge locations mainly serve Amazon CloudFront, Amazon's content delivery network (CDN). There are more edge locations than regions; currently there are more than 150 edge locations. An edge location is not a region but a smaller site.

4. Regional Edge Cache:

In November 2016, Amazon Web Services announced a new type of edge location known as a regional edge cache. A regional edge cache sits between the CloudFront origin servers and the edge locations. It has a larger cache than an individual edge location, so when data is removed from the cache at an edge location, it is still retained at the regional edge cache.
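The relationship between edge locations, regional edge caches, and the origin can be illustrated with a toy two-tier cache. This is a sketch under stated assumptions, not a description of how CloudFront is implemented: the `LRUCache` class, the `fetch` helper, the cache capacities, and the least-recently-used eviction policy are all chosen for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """A small least-recently-used cache with a fixed capacity."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key, value):
        self.items[key] = value
        self.items.move_to_end(key)
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used


def fetch(key, edge, regional, origin):
    """Look up `key` edge-first and report which tier served it."""
    value = edge.get(key)
    if value is not None:
        return value, "edge"
    value = regional.get(key)
    source = "regional"
    if value is None:
        value = origin[key]  # fall back to the origin server
        source = "origin"
        regional.put(key, value)
    edge.put(key, value)
    return value, source


if __name__ == "__main__":
    origin = {"a.png": b"A", "b.png": b"B", "c.png": b"C"}
    edge, regional = LRUCache(2), LRUCache(10)
    for name in ("a.png", "b.png", "c.png", "a.png", "c.png"):
        print(name, fetch(name, edge, regional, origin)[1])
    # a.png origin
    # b.png origin
    # c.png origin
    # a.png regional
    # c.png edge
```

The fourth request shows the point of the regional tier: `a.png` has already been evicted from the small edge cache, yet it is served from the regional cache instead of going back to the origin.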

AWS environment setup:

Before learning how to work with AWS, you need to set up the AWS environment. First, you need to log in to the AWS Management Console. AWS also provides toolkits for two integrated development environments (IDEs): Visual Studio and Eclipse.

Let's go through the setup step by step.

The steps involved are:

1. Creating a login for the AWS console:

You can create login details for the AWS console as a free member using the AWS Free Tier option. The following steps explain how to create login details using the AWS console.

Step 1: Visit https://aws.amazon.com/free/ to create your account. Fill in all the mandatory fields in the form displayed, click the create account button, and continue.

Step 2: Enter your payment details. The next step is mobile verification.

Step 3: Once your number is verified, an account activation link will be sent to your email id.

Step 4: Click the link in the email, then enter your account name or email id and your password to log in to the AWS console, as shown below.

The AWS account name will be displayed at the top-right corner, as shown in the above figure. Now you can start using the AWS services. The languages supported include Node.js, Python, Java, C++, and C#.

AWS storage services:

S3 stands for Simple Storage Service, and it is one of the first services launched by Amazon Web Services. S3 is considered a safe place to store data files. S3 is object-based storage; you can store images, PDF files, Word files, and so on. A single object can range in size from 0 bytes to 5 terabytes. Files are stored in buckets. A bucket is like a folder that is used to store the files. S3 uses a universal namespace, so each bucket name must be unique, and the bucket name forms part of a unique DNS address.

A bucket is addressed through a URL such as the following:

https://s3-eu-west-1.amazonaws.com/acloudguru

Here eu-west-1, taken from the s3-eu-west-1 endpoint, is the region name and acloudguru is the bucket name.
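The URL above can also be taken apart programmatically. The sketch below uses only Python's standard library; `parse_path_style_url` is a hypothetical helper name, and the pattern covers just the path-style form shown here (`s3-<region>` or `s3.<region>` followed by `.amazonaws.com`), not every S3 URL variant.

```python
import re
from urllib.parse import urlparse

# Matches regional endpoints such as "s3-eu-west-1.amazonaws.com"
# or "s3.eu-west-1.amazonaws.com".
_ENDPOINT = re.compile(r"^s3[.-](?P<region>[a-z0-9-]+)\.amazonaws\.com$")


def parse_path_style_url(url):
    """Return (region, bucket, key) from a path-style S3 URL."""
    parts = urlparse(url)
    match = _ENDPOINT.match(parts.netloc)
    if match is None:
        raise ValueError(f"not a recognised S3 endpoint: {parts.netloc}")
    # The first path segment is the bucket; anything after it is the object key.
    bucket, _, key = parts.path.lstrip("/").partition("/")
    if not bucket:
        raise ValueError("URL does not contain a bucket name")
    return match.group("region"), bucket, key


if __name__ == "__main__":
    print(parse_path_style_url("https://s3-eu-west-1.amazonaws.com/acloudguru"))
    # ('eu-west-1', 'acloudguru', '')
```

An object inside the bucket would appear as extra path segments after the bucket name, which the helper returns as the key.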

Functions and concepts of S3:

1. This simple storage service allows unlimited storage of objects or files, each ranging from 0 bytes to 5 terabytes. A single upload request is limited to 5 gigabytes; larger objects are uploaded in parts.

2. Each object consists of the raw object data and its metadata.

3. Objects are stored and retrieved using a developer-assigned key.

4. Data is kept secure from unauthorized access through an authentication mechanism.

5. Objects can be made available to the public over HTTP or the BitTorrent protocol.
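The object model described in the list above (data plus metadata, addressed by a developer-assigned key) can be mimicked with a small in-memory class. This is a toy stand-in for illustration, not the real S3 API: `ToyBucket`, `StoredObject`, and their methods are hypothetical names, and the size limit simply mirrors the 5 TB figure mentioned earlier in this section.

```python
from dataclasses import dataclass, field

MAX_OBJECT_SIZE = 5 * 1024**4  # 5 TB, the per-object limit mentioned earlier


@dataclass
class StoredObject:
    data: bytes
    metadata: dict = field(default_factory=dict)


class ToyBucket:
    """In-memory stand-in for a bucket; not the real S3 API."""

    def __init__(self, name):
        self.name = name
        self._objects = {}

    def put(self, key, data, **metadata):
        if len(data) > MAX_OBJECT_SIZE:
            raise ValueError("object exceeds the maximum size")
        # Writing to an existing key replaces the previous object.
        self._objects[key] = StoredObject(data, dict(metadata))

    def get(self, key):
        return self._objects[key]

    def keys(self, prefix=""):
        """List keys starting with `prefix`, which is how folders are emulated."""
        return sorted(k for k in self._objects if k.startswith(prefix))


if __name__ == "__main__":
    bucket = ToyBucket("acloudguru")
    bucket.put("docs/report.pdf", b"%PDF-1.7", content_type="application/pdf")
    bucket.put("img/logo.png", b"\x89PNG")
    print(bucket.keys("docs/"))                    # ['docs/report.pdf']
    print(bucket.get("docs/report.pdf").metadata)  # {'content_type': 'application/pdf'}
```

Note that the key is just a string; the slash-separated prefix only looks like a folder path.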

Advantages of Amazon S3:

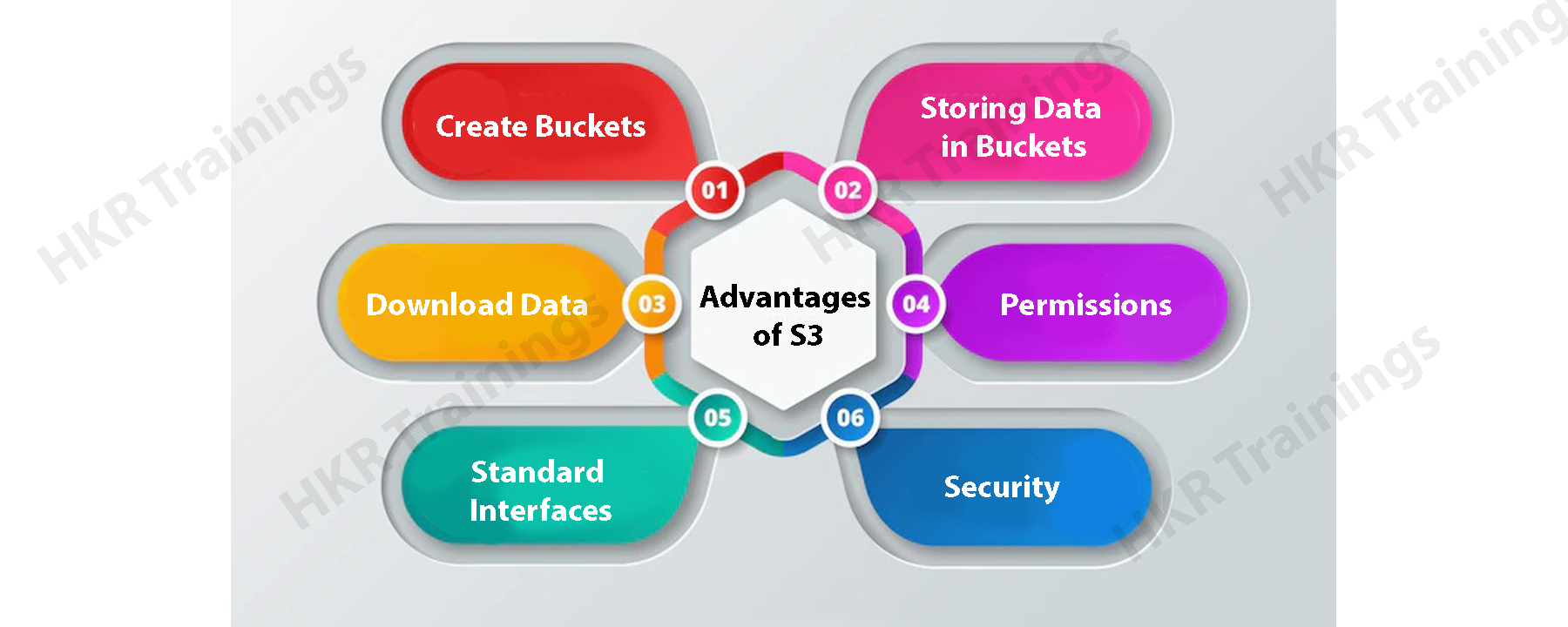

The diagram above shows the major advantages of Amazon S3:

1. Helps in the creation of buckets:

In S3, you first create a bucket and give it a name. Buckets are the containers in Simple Storage Service that store the data. Each bucket must have a unique name, which is used to create a unique DNS address.

2. Supports storing data in buckets:

A bucket can store an unlimited amount of data, so you can upload any number of files into an Amazon S3 bucket. Each object is stored and retrieved with the help of a unique developer-assigned key.

3. Helps to download data:

With Amazon S3, you can download your own data from a bucket and also give others permission to download the same data. You can download any amount of data at any time.

4. Permissions:

You can grant or deny access permission to any users who want to upload or download data from the Amazon S3 bucket. The authentication mechanism keeps the data secure from unauthorized access.

5. Standard interfaces:

S3 provides standard REST and SOAP interfaces. These interfaces are designed to work with any development toolkit.

6. Offers better security:

Amazon S3 offers security features that prevent unauthorized users from accessing the data.
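Granting or denying access, as described under permissions above, is commonly expressed as a JSON bucket policy. The sketch below only builds such a document with Python's standard library; it does not call AWS. The helper name `object_read_policy` and the `Sid` value are hypothetical, and the bucket name is reused from the earlier example. Whether a policy like this takes effect also depends on account-level settings that are outside the scope of this sketch.

```python
import json


def object_read_policy(bucket_name, effect="Allow"):
    """Build a policy document that allows or denies downloads for everyone."""
    if effect not in ("Allow", "Deny"):
        raise ValueError("effect must be 'Allow' or 'Deny'")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicObjectRead",  # hypothetical statement id
                "Effect": effect,
                "Principal": "*",           # applies to any requester
                "Action": "s3:GetObject",   # downloading objects
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(object_read_policy("acloudguru"), indent=2))
```

Switching `effect` to `"Deny"` produces the opposite rule for the same set of objects.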

Conclusion:

In this AWS (Amazon Web Services) tutorial, you have learned the definitions, advantages, architecture, environment setup, storage services, and how to create your own AWS account. AWS is Amazon's cloud service platform and offers distributed IT infrastructure for working with different IT resources. It provides services such as Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). It is one of the most popular cloud platforms and allows organizations to take full advantage of reliable IT infrastructure. I hope this article helps you learn and master this amazing service.

About Author

Ishan is an IT graduate who has always been passionate about writing and storytelling. He has been tech-savvy and a literary fanatic since his college days. Proficient in Data Science, Cloud Computing, and DevOps, he looks forward to sharing his words with the widest possible audience so that readers feel the adrenaline he feels when he writes about technological advancements. Apart from writing technical blogs, he is an entertainment writer, a blogger, and a traveler.
